
It is well established that the tens of thousands of GPUs used to train large language models (LLMs) consume a prodigious amount of energy, leading to warnings about their potential impact on Earth’s climate.

However, according to the Information Technology and Innovation Foundation’s Center for Data Innovation (CDI), a Washington DC-based think tank backed by tech giants like Intel, Microsoft, Google, Meta, and AMD, the infrastructure powering AI isn’t a major threat.

In a recent report [PDF], the Center posited that many of the concerns raised over AI’s power consumption are overblown and draw from flawed interpretations of the data. The group also contends that AI will likely have a positive effect on Earth’s climate by replacing less efficient processes and optimizing others.

“Discussing the energy usage trends of AI systems can be misleading without considering the substitutional effects of the technology. Many digital technologies help decarbonize the economy by substituting moving bits for moving atoms,” the group wrote.

The Center’s document points to a study [PDF] by Cornell University that found using AI to write a page of text created CO2 emissions between 130 and 1,500 times lower than those created when an American performed the same activity using a standard laptop, although that figure also includes carbon emissions from living and commuting. A closer look at the figures, however, shows they omit the 552 metric tons of CO2 generated by training ChatGPT in the first place.

The argument can be made that the amount of power used to train an LLM is dwarfed by what’s consumed by deploying it at scale, a process called inferencing. AWS estimates that inferencing accounts for 90 percent of the cost of a model, while Meta puts it at closer to 65 percent. Models are also retrained from time to time.

The CDI report also suggests that just as a smart thermostat can reduce a home’s energy consumption and carbon footprint, AI could achieve similar efficiencies by preemptively forecasting grid demand. Other examples included using AI to work out how much water or fertilizer farmers should use for optimal efficiency, or tracking methane emissions from satellite data.

Of course, for us to know whether AI is actually making the situation better, we need to measure it, and according to CDI there’s plenty of room for improvement in this regard.

Why so many estimates get it wrong

According to the Center for Data Innovation, this isn’t the first time technology’s energy consumption has been met with sensationalist headlines.

The group pointed to one claim from the peak of the dot-com era that estimated that the digital economy would account for half the electric grid’s resources within a decade. Decades later, the International Energy Agency (IEA) estimates that datacenters and networks account for just 1-1.5 percent of global energy use.

That’s a lovely number for the Center’s backers, whose various deeds have earned them years of antitrust action that imperils their social license.

But it’s also a number that’s hard to take at face value, because datacenters are complex systems. Measuring the carbon footprint or energy consumption of something like training or inferencing an AI model is therefore prone to error, the CDI study contends, without irony.

One example highlighted cites a paper by the University of Massachusetts Amherst that estimates the carbon footprint of Google’s BERT natural language processing model. This information was then used to estimate the carbon emissions from training a neural architecture search model, which rendered a result of 626,155 pounds of CO2 emissions.

The findings were widely published in the press, yet a later study showed the actual emissions were 88 times smaller than initially thought.
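To put those two figures on a common footing, the arithmetic can be sketched in a few lines of Python. This is only an illustration: it assumes "88 times smaller" means dividing the original estimate by 88, and the function and variable names are made up for this example rather than taken from either study.

```python
# Illustrative arithmetic only; names below are hypothetical, not from the studies.
LB_TO_KG = 0.45359237  # international avoirdupois pound, exact by definition


def pounds_to_metric_tons(pounds: float) -> float:
    """Convert pounds to metric tons (1 metric ton = 1,000 kg)."""
    return pounds * LB_TO_KG / 1000.0


original_estimate_lb = 626_155  # figure quoted above
overestimate_factor = 88        # assumption: "88 times smaller" means divide by 88

revised_lb = original_estimate_lb / overestimate_factor
original_t = pounds_to_metric_tons(original_estimate_lb)
revised_t = pounds_to_metric_tons(revised_lb)

print(f"original: {original_estimate_lb:,} lb = {original_t:.0f} metric tons")
print(f"revised:  {revised_lb:,.0f} lb = {revised_t:.1f} metric tons")
```

Under that assumption, the headline figure of roughly 284 metric tons shrinks to a little over 3 metric tons.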

Where estimates are accurate, the report contends that other factors, like the mix of renewable energy, the cooling tech, and even the accelerators themselves, mean they’re only really representative of that workload at that place and time.

The logic goes something like this: If you train the same model two years later using newer accelerators, the CO2 emissions associated with that job might look completely different. This consequently means that a larger model won’t necessarily consume more power or produce more greenhouse gases as a byproduct.

There are a few reasons for this, but one of them is that AI hardware is getting faster, and another is that the models that make headlines may not always be the most efficient, leaving room for optimization.

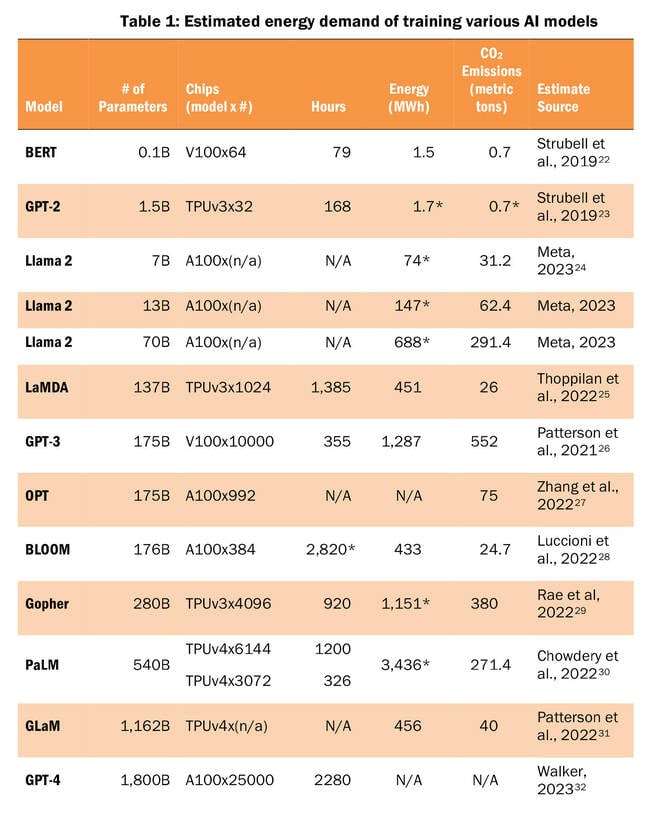

From this chart, we see that more modern accelerators, like Nvidia’s A100 or Google’s TPUv4, have a larger impact on emissions than parameter size.

“Researchers continue to experiment with techniques such as pruning, quantization, and distillation to create more compact AI models that are faster and more energy efficient with minimal loss of accuracy,” the author wrote.

The CDI report’s argument appears to be that past attempts to extrapolate power consumption or carbon emissions haven’t aged well, either because they make too many assumptions, are based on flawed measurements, or fail to take into account the pace of hardware or software innovation.

While there’s merit to model optimization, the report does seem to overlook the fact that Moore’s Law is slowing down and that generational improvements in performance aren’t expected to bring matching energy efficiency upticks.

Improving visibility, avoiding regulation, and boosting spending

The report offers several suggestions for how policymakers should respond to concerns about AI’s energy footprint.

The first involves developing standards for measuring the power consumption and carbon emissions associated with both AI training and inferencing workloads. Once these have been established, the Center for Data Innovation suggests that policymakers should encourage voluntary reporting.

“Voluntary” appears to be the key word here. While the group says it isn’t opposed to regulating AI, the author paints a Catch-22 in which trying to regulate the industry is a lose-lose situation.

“Policymakers rarely consider that their demands can raise the energy requirements to train and use AI models. For example, debiasing techniques for LLMs frequently add more energy costs in the training and fine-tuning stages,” the report reads. “Similarly implementing safeguards to check that LLMs do not return harmful output, such as offensive speech, can result in additional compute costs during inference.”

In other words, try to mandate safeguards and you might make the model more power hungry; mandate power limits and you risk making the model less safe.

Unsurprisingly, the final recommendation calls for governments, including the US, to invest in AI as a way to decarbonize their operations. This includes using AI to optimize building, transportation, and other city-wide systems.

“To accelerate the use of AI across government agencies toward this goal, the president should sign an executive order directing the Technology Modernization Fund… include environmental impact as one of the priority investment areas for projects to fund,” the group wrote.

Of course, all of this is going to require better GPUs and AI accelerators, either purchased directly or rented from cloud providers. That’s good news for technology companies, which produce and sell the tools necessary to run these models.

So it’s not surprising that Nvidia was keen to highlight the report in a recent blog post. Nvidia has seen its revenues skyrocket in recent quarters as demand for AI hardware reaches a fever pitch. ®
