
Graphs are data structures that represent complex relationships across a wide range of domains, including social networks, knowledge bases, biological systems, and many more. In these graphs, entities are represented as nodes, and their relationships are depicted as edges.

The ability to effectively represent and reason about these intricate relational structures is crucial for enabling advances in fields like network science, cheminformatics, and recommender systems.

Graph Neural Networks (GNNs) have emerged as a powerful deep learning framework for graph machine learning tasks. By incorporating the graph topology into the neural network architecture through neighborhood aggregation or graph convolutions, GNNs can learn low-dimensional vector representations that encode both the node features and their structural roles. This allows GNNs to achieve state-of-the-art performance on tasks such as node classification, link prediction, and graph classification across diverse application areas.

While GNNs have driven substantial progress, some key challenges remain. Obtaining high-quality labeled data for training supervised GNN models can be expensive and time-consuming. Additionally, GNNs can struggle with heterogeneous graph structures and with situations where the graph distribution at test time differs significantly from the training data (out-of-distribution generalization).

In parallel, Large Language Models (LLMs) like GPT-4 and LLaMA have taken the world by storm with their impressive natural language understanding and generation capabilities. With billions of parameters and trained on massive text corpora, LLMs exhibit remarkable few-shot learning abilities, generalization across tasks, and commonsense reasoning skills that were once thought to be extremely challenging for AI systems.

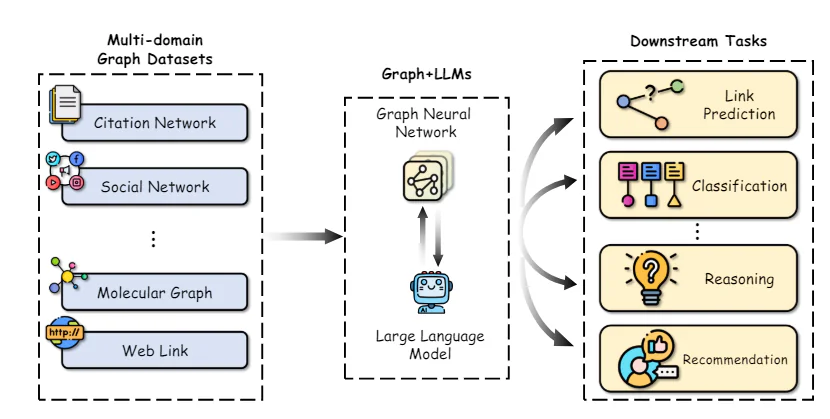

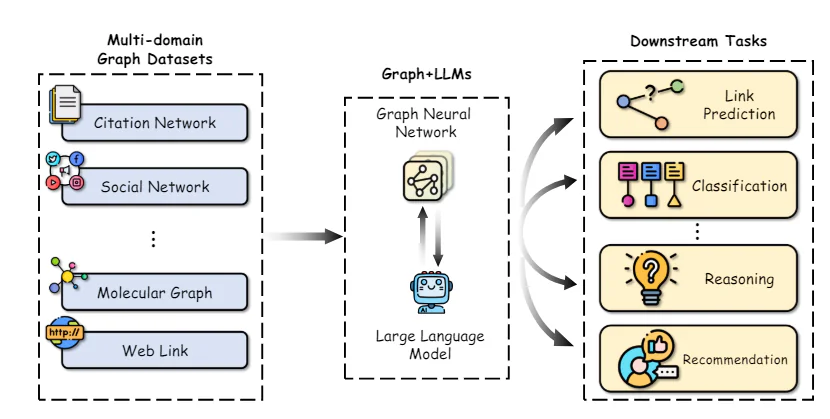

The tremendous success of LLMs has catalyzed efforts to leverage their power for graph machine learning tasks. On one hand, the knowledge and reasoning capabilities of LLMs present opportunities to enhance traditional GNN models. On the other hand, the structured representations and factual knowledge inherent in graphs could be instrumental in addressing some key limitations of LLMs, such as hallucinations and lack of interpretability.

In this article, we’ll delve into the latest research at the intersection of graph machine learning and large language models. We will explore how LLMs can be used to enhance various aspects of graph ML, review approaches to incorporating graph knowledge into LLMs, and discuss emerging applications and future directions for this exciting field.

Graph Neural Networks and Self-Supervised Learning

To provide the necessary context, we’ll first briefly review the core concepts and methods in graph neural networks and self-supervised graph representation learning.

Graph Neural Network Architectures

The key distinction between traditional deep neural networks and GNNs lies in their ability to operate directly on graph-structured data. GNNs follow a neighborhood aggregation scheme, where each node aggregates feature vectors from its neighbors to compute its own representation.
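To make the aggregation scheme concrete, here is a minimal NumPy sketch of a single message-passing layer. It assumes a mean aggregator, separate self and neighbor weight matrices, and a ReLU update (close in spirit to GraphSAGE); the function name and parameters are hypothetical and not taken from any particular library.

```python
import numpy as np

def message_passing_layer(features, edges, w_self, w_neigh):
    """One round of neighborhood aggregation with a mean aggregator.

    features: (num_nodes, d_in) array of node feature vectors
    edges:    iterable of (src, dst) pairs; messages flow src -> dst
    w_self, w_neigh: (d_in, d_out) weight matrices (hypothetical names)
    """
    num_nodes = features.shape[0]
    agg = np.zeros(features.shape, dtype=float)
    counts = np.zeros(num_nodes)
    # Message step: each node sums the feature vectors of its in-neighbors
    for src, dst in edges:
        agg[dst] += features[src]
        counts[dst] += 1
    # Mean over neighbors; nodes without neighbors receive a zero message
    agg /= np.maximum(counts, 1)[:, None]
    # Update step: combine own features with the aggregated message, then ReLU
    return np.maximum(0.0, features @ w_self + agg @ w_neigh)

# Usage: a 3-node graph where node 2 is connected to nodes 0 and 1
x = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
edges = [(0, 2), (1, 2), (2, 0), (2, 1)]  # undirected edges listed in both directions
h = message_passing_layer(x, edges, np.eye(2), np.eye(2))
print(h)
```

Stacking k such layers lets information travel k hops, which is why purely neighborhood-based GNNs need depth to see distant nodes.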

Numerous GNN architectures have been proposed with different instantiations of the message and update functions, such as Graph Convolutional Networks (GCNs), GraphSAGE, Graph Attention Networks (GATs), and Graph Isomorphism Networks (GINs), among others.
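As one concrete instantiation, the GCN of Kipf and Welling expresses message passing as a product with a symmetrically normalized adjacency matrix. The sketch below implements that layer in NumPy with a ReLU nonlinearity; GraphSAGE, GAT, and GIN differ mainly in how neighbor messages are combined (sampled neighbors concatenated with the node's own features, learned attention coefficients, and sum aggregation followed by an MLP, respectively).

```python
import numpy as np

def gcn_layer(adj, features, weight):
    """One GCN layer: H' = ReLU(D^-1/2 (A + I) D^-1/2 H W)."""
    a_hat = adj + np.eye(adj.shape[0])           # add self-loops so a node keeps its own signal
    deg = a_hat.sum(axis=1)                      # degrees, including the self-loop
    d_inv_sqrt = np.diag(1.0 / np.sqrt(deg))     # D^-1/2
    norm_adj = d_inv_sqrt @ a_hat @ d_inv_sqrt   # symmetric normalization
    return np.maximum(0.0, norm_adj @ features @ weight)

# Usage: two connected nodes with scalar features 1 and 3
adj = np.array([[0.0, 1.0], [1.0, 0.0]])
out = gcn_layer(adj, np.array([[1.0], [3.0]]), np.array([[1.0]]))
print(out)  # each node gets 0.5 * (1 + 3) = 2.0
```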

More recently, graph transformers have gained popularity by adapting the self-attention mechanism from natural language transformers to operate on graph-structured data. Examples include Graphormer, Graph Transformer, and GraphFormers. Because attention can connect any pair of nodes within a single layer, these models are able to capture long-range dependencies across the graph better than purely neighborhood-based GNNs, which must stack many layers to propagate information over long distances.
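A simplified, single-head sketch of the idea: every node attends to every other node, and a scalar bias indexed by shortest-path distance injects graph structure into the attention scores. This is loosely inspired by Graphormer's spatial encoding; here the bias values are plain inputs rather than learned parameters, and real graph transformers add multi-head attention, further structural encodings, and feed-forward blocks.

```python
from collections import deque

import numpy as np

def shortest_path_lengths(adj):
    """All-pairs hop distances via BFS; unreachable pairs get -1."""
    n = adj.shape[0]
    dist = np.full((n, n), -1, dtype=int)
    for s in range(n):
        dist[s, s] = 0
        queue = deque([s])
        while queue:
            u = queue.popleft()
            for v in np.nonzero(adj[u])[0]:
                if dist[s, v] < 0:
                    dist[s, v] = dist[s, u] + 1
                    queue.append(v)
    return dist

def graph_self_attention(x, adj, w_q, w_k, w_v, spd_bias):
    """Single-head self-attention over ALL node pairs plus a distance bias.

    spd_bias: 1-D array; spd_bias[d] is added to the score of node pairs that
    are d hops apart. The last entry also covers longer and unreachable pairs.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[1])
    dist = shortest_path_lengths(adj)
    max_d = len(spd_bias) - 1
    idx = np.where((dist < 0) | (dist > max_d), max_d, dist)
    scores = scores + spd_bias[idx]
    # Row-wise softmax, shifted for numerical stability
    scores -= scores.max(axis=1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ v, weights

# Usage: a 4-node path 0-1-2-3; node 0 attends to node 3 within one layer
adj = np.zeros((4, 4))
for u, v in [(0, 1), (1, 2), (2, 3)]:
    adj[u, v] = adj[v, u] = 1.0
out, attn = graph_self_attention(
    np.eye(4), adj, np.eye(4), np.eye(4), np.eye(4),
    spd_bias=np.array([1.0, 0.5, 0.0, -0.5]),
)
print(attn[0])
```

A single neighborhood-aggregation layer would give node 0 no information about node 3 here; the attention weights show a nonzero contribution after one layer.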

Self-Supervised Learning on Graphs

While GNNs are powerful representational models, their performance is often bottlenecked by the lack of large labeled datasets required for supervised training. Self-supervised learning has emerged as a promising paradigm for pre-training GNNs on unlabeled graph data through pretext tasks that require only the intrinsic graph structure and node features.

Some common pretext tasks used for self-supervised GNN pre-training include:

- Node Property Prediction: Randomly masking or corrupting a portion of the node attributes/features and tasking the GNN with reconstructing them.

- Edge/Link Prediction: Learning to predict whether an edge exists between a pair of nodes, often based on random edge masking.

- Contrastive Learning: Maximizing the similarity between different augmented views of the same graph sample while pushing apart views of different graphs.

- Mutual Information Maximization: Maximizing the mutual information between local node representations and a target representation such as the global graph embedding.
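The contrastive objective in the list above is commonly implemented with an InfoNCE-style loss. The sketch below assumes the GNN encoder and the graph augmentations (for example edge dropping or feature masking) have already produced two embedding matrices whose rows are aligned; it is one common formulation, not the loss of any specific method.

```python
import numpy as np

def info_nce_loss(z1, z2, temperature=0.5):
    """InfoNCE loss between two views of the same batch of graphs (or nodes).

    z1, z2: (batch, dim) embeddings; row i of z1 and row i of z2 come from two
    augmented views of the same sample (positive pair). All other rows of z2
    act as negatives for row i of z1.
    """
    # L2-normalize so the dot product is cosine similarity
    z1 = z1 / np.linalg.norm(z1, axis=1, keepdims=True)
    z2 = z2 / np.linalg.norm(z2, axis=1, keepdims=True)
    logits = z1 @ z2.T / temperature               # (batch, batch) similarity matrix
    logits -= logits.max(axis=1, keepdims=True)    # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Positives sit on the diagonal: maximize their log-probability
    return -np.mean(np.diag(log_probs))

# Usage: nearly identical views score a lower loss than mismatched ones
rng = np.random.default_rng(0)
z = rng.normal(size=(8, 16))
aligned = info_nce_loss(z, z + 0.01 * rng.normal(size=z.shape))
mismatched = info_nce_loss(z, np.roll(z, 1, axis=0))
print(aligned, mismatched)
```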

Pretext tasks like these allow the GNN to extract meaningful structural and semantic patterns from unlabeled graph data during pre-training. The pre-trained GNN can then be fine-tuned on relatively small labeled subsets to excel at various downstream tasks like node classification, link prediction, and graph classification.
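The cheapest form of this adaptation step is linear probing: the pre-trained encoder is frozen and only a small classifier head is trained on the few available labels. The sketch below assumes the node embeddings were already produced by a pre-trained GNN (synthetic clusters stand in for them here); full fine-tuning would additionally update the GNN's own weights.

```python
import numpy as np

def train_linear_head(embeddings, labels, num_classes, lr=0.1, epochs=200):
    """Fit a softmax classifier on frozen, pre-trained node embeddings.

    Only the head (W, b) is trained; the embeddings are treated as fixed.
    """
    n, d = embeddings.shape
    W = np.zeros((d, num_classes))
    b = np.zeros(num_classes)
    y = np.eye(num_classes)[labels]                # one-hot targets
    for _ in range(epochs):
        logits = embeddings @ W + b
        logits -= logits.max(axis=1, keepdims=True)
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad = (probs - y) / n                     # d(mean cross-entropy)/d(logits)
        W -= lr * embeddings.T @ grad
        b -= lr * grad.sum(axis=0)
    return W, b

def predict(embeddings, W, b):
    return np.argmax(embeddings @ W + b, axis=1)

# Usage: two synthetic clusters stand in for pre-trained embeddings
rng = np.random.default_rng(0)
emb = np.vstack([rng.normal(2.0, 1.0, size=(10, 4)), rng.normal(-2.0, 1.0, size=(10, 4))])
labels = np.array([0] * 10 + [1] * 10)
W, b = train_linear_head(emb, labels, num_classes=2)
acc = (predict(emb, W, b) == labels).mean()
print(acc)
```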

By leveraging self-supervision, GNNs pre-trained on large unlabeled datasets often exhibit better generalization, greater robustness to distribution shifts, and higher efficiency compared to training from scratch. However, some key limitations of traditional GNN-based self-supervised methods remain, and we explore how LLMs can help address them next.

Enhancing Graph ML with Large Language Models

The remarkable capabilities of LLMs in natural language understanding, reasoning, and few-shot learning present opportunities to enhance multiple stages of graph machine learning pipelines. We explore some key research directions in this space below.

A key challenge in applying GNNs is obtaining high-quality feature representations for nodes and edges, especially when they carry rich textual attributes like descriptions, titles, or abstracts. Traditionally, simple bag-of-words or pre-trained word embedding models have been used, which often fail to capture nuanced semantics.
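A small illustration of the bag-of-words limitation: two titles that mean nearly the same thing but share no words get a similarity of zero, while titles on different topics that share boilerplate words score high. The example sentences are made up for illustration.

```python
import math
from collections import Counter

def bag_of_words(text):
    """Lower-cased whitespace tokenization into a word-count vector."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

t1 = "graph neural networks for molecules"
t2 = "GNN models applied to chemical compounds"   # similar meaning, no shared words
t3 = "graph neural networks for social media"     # different domain, many shared words
print(cosine(bag_of_words(t1), bag_of_words(t2)))  # 0.0
print(cosine(bag_of_words(t1), bag_of_words(t3)))  # about 0.73
```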

Recent works have demonstrated the power of using large language models as text encoders to construct better node/edge feature representations before passing them to the GNN. For example, Chen et al. use LLMs like GPT-3 to encode textual node attributes, showing significant performance gains over traditional word embeddings on node classification tasks.
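The pipeline shape is text, then encoder, then node feature matrix, then GNN. The sketch below keeps the encoder pluggable: `TextEncoder` is an assumed interface, and `toy_encoder` is a hypothetical offline stand-in (hashed character trigrams) so the example runs without model access. In a real setup the encoder would wrap an LLM or sentence-embedding model, and a single parameter-free mean-aggregation step stands in for the GNN. This does not reproduce the setup of Chen et al.

```python
import zlib
from typing import Callable, List

import numpy as np

# Assumed interface: any function mapping a list of strings to a (num_texts, dim) array.
TextEncoder = Callable[[List[str]], np.ndarray]

def toy_encoder(texts: List[str], dim: int = 16) -> np.ndarray:
    """Hypothetical stand-in for an LLM encoder: hashed character trigrams,
    L2-normalized. It has none of an LLM's semantic knowledge."""
    out = np.zeros((len(texts), dim))
    for i, text in enumerate(texts):
        for j in range(len(text) - 2):
            out[i, zlib.crc32(text[j:j + 3].encode()) % dim] += 1.0
    return out / np.maximum(np.linalg.norm(out, axis=1, keepdims=True), 1e-12)

def build_node_features(node_texts: List[str], adj: np.ndarray, encoder: TextEncoder) -> np.ndarray:
    """Encode each node's text, then apply one mean-aggregation step so that
    each node's vector also reflects its neighbors' text."""
    x = encoder(node_texts)                    # (num_nodes, dim) text features
    a_hat = adj + np.eye(adj.shape[0])         # keep each node's own features
    return (a_hat / a_hat.sum(axis=1, keepdims=True)) @ x

# Usage: nodes 0 and 1 are linked; node 2 is isolated
texts = ["Transformers for text", "Graph attention networks", "Protein folding"]
adj = np.array([[0, 1, 0], [1, 0, 0], [0, 0, 0]], dtype=float)
feats = build_node_features(texts, adj, toy_encoder)
print(feats.shape)  # (3, 16)
```

Keeping the encoder as a parameter means swapping a weak encoder for an LLM-based one changes only the function passed in, not the graph code.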

Beyond serving as better text encoders, LLMs can be used to generate augmented information from the original text attributes in a semi-supervised manner. TAPE uses an LLM to generate potential labels and explanations for nodes and uses these as additional augmented features. KEA uses an LLM to extract terms from text attributes and obtains detailed descriptions of these terms to augment the features.
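The TAPE-style augmentation can be sketched as: prompt an LLM for a predicted label and an explanation for each node, then encode that generated text as extra features alongside the original text features. The prompt wording, the `llm` callable, the toy encoder, and the simple concatenation are all assumptions for illustration, not TAPE's actual training pipeline; a KEA-style variant would instead prompt for key terms and their descriptions.

```python
import zlib
from typing import Callable, List, Tuple

import numpy as np

def make_explanation_prompt(node_text: str, label_names: List[str]) -> str:
    """Hypothetical prompt asking an LLM for a label guess plus a rationale."""
    return (
        f"Text: {node_text}\n"
        f"Which category fits best: {', '.join(label_names)}?\n"
        "Answer with your top prediction and a short explanation."
    )

def toy_encoder(texts: List[str], dim: int = 8) -> np.ndarray:
    """Hypothetical stand-in text encoder: hashed bag of words."""
    out = np.zeros((len(texts), dim))
    for i, text in enumerate(texts):
        for word in text.lower().split():
            out[i, zlib.crc32(word.encode()) % dim] += 1.0
    return out

def augment_features(
    node_texts: List[str],
    label_names: List[str],
    llm: Callable[[str], str],
    encoder: Callable[[List[str]], np.ndarray],
) -> Tuple[np.ndarray, List[str]]:
    """Concatenate embeddings of the original text with embeddings of the
    LLM-generated prediction/explanation text."""
    generated = [llm(make_explanation_prompt(t, label_names)) for t in node_texts]
    features = np.concatenate([encoder(node_texts), encoder(generated)], axis=1)
    return features, generated

def stub_llm(prompt: str) -> str:
    """Stub in place of a real LLM call, so the example runs offline."""
    return "Prediction: chemistry. Explanation: the text mentions molecules."

feats, explanations = augment_features(
    ["A GNN for predicting molecule solubility", "Community detection in social networks"],
    ["chemistry", "social science"],
    stub_llm,
    toy_encoder,
)
print(feats.shape)  # (2, 16)
```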

By improving the quality and expressiveness of input features, LLMs can impart their superior natural language understanding capabilities to GNNs, boosting performance on downstream tasks.
