
I remember the first time I used v1.0 of Visual Basic. Back then, it was a program for DOS. Before it, writing programs was extremely complex and I’d never managed to make much progress beyond the most basic toy applications. But with VB, I drew a button on the screen, typed in a single line of code that I wanted to run when that button was clicked, and I had a complete application I could now run. It was such an amazing experience that I’ll never forget that feeling.

It felt like coding would never be the same again.

Writing code in Mojo, a new programming language from Modular, is the second time in my life I’ve had that feeling. Here’s what it looks like:

Why not simply use Python?

Before I explain why I’m so excited about Mojo, I first need to say a few things about Python.

Python is the language that I’ve used for nearly all my work over the last few years. It’s a beautiful language. It has an elegant core, on which everything else is built. This approach means that Python can (and does) do absolutely anything. But it comes with a downside: performance.

A few percent here or there doesn’t matter. But Python is many thousands of times slower than languages like C++. This makes it impractical to use Python for the performance-sensitive parts of code – the inner loops where performance is critical.

However, Python has a trick up its sleeve: it can call out to code written in fast languages. So Python programmers learn to avoid using Python for the implementation of performance-critical sections, instead using Python wrappers over code written in C, FORTRAN, Rust, and so on. Libraries like Numpy and PyTorch provide “pythonic” interfaces to high performance code, allowing Python programmers to feel right at home, even as they’re using highly optimised numeric libraries.
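As a rough illustration of this trick, the sketch below computes the same dot product twice: once with the inner loop written in Python, and once with the loop pushed down into CPython built-ins (`sum`, `map`, and `operator.mul`), which are implemented in C. The function names are made up for this example, and the built-ins are only a small-scale stand-in for what libraries like Numpy do.

```python
import operator

def dot_pure_python(a, b):
    """Dot product with the inner loop executed by the Python interpreter."""
    total = 0
    for x, y in zip(a, b):
        total += x * y
    return total

def dot_builtin(a, b):
    """Same result, but the loop runs inside C-implemented built-ins."""
    return sum(map(operator.mul, a, b))

a = list(range(1_000))
b = list(range(1_000, 2_000))
assert dot_pure_python(a, b) == dot_builtin(a, b)
```

Both functions return the same value; the only difference is where the loop executes. Timings are left out because they vary by machine and Python version.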

Nearly all AI models today are developed in Python, thanks to the flexible and elegant programming language, fantastic tools and ecosystem, and high performance compiled libraries.

But this “two-language” approach has serious downsides. For instance, AI models often have to be converted from Python into a faster implementation, such as ONNX or torchscript. But these deployment approaches can’t support all of Python’s features, so Python programmers have to learn to use a subset of the language that matches their deployment target. It’s very hard to profile or debug the deployment version of the code, and there’s no guarantee it will even run identically to the Python version.

The two-language problem gets in the way of learning. Instead of being able to step into the implementation of an algorithm while your code runs, or jump to the definition of a method of interest, you find yourself deep in the weeds of C libraries and binary blobs. All coders are learners (or at least, they should be) because the field constantly develops, and no-one can understand it all. So difficulties in learning are a problem for experienced devs just as much as for students starting out.

The same problem occurs when trying to debug code or find and resolve performance problems. The two-language problem means that the tools that Python programmers are familiar with no longer apply as soon as we find ourselves jumping into the backend implementation language.

There are also unavoidable performance problems, even when a faster compiled implementation language is used for a library. One major issue is the lack of “fusion” – that is, calling a bunch of compiled functions in a row leads to a lot of overhead, as data is converted to and from Python formats, and the cost of switching from Python to C and back must be paid repeatedly. So instead we have to write special “fused” versions of common combinations of functions (such as a linear layer followed by a rectified linear layer in a neural net), and call these fused versions from Python. This means there are a lot more library functions to implement and remember, and you’re out of luck if you’re doing anything even slightly non-standard because there won’t be a fused version for you.
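To make the idea of fusion concrete, here is a minimal pure-Python sketch. It assumes a toy “linear layer” with a single scalar weight and bias (real layers use matrix multiplies), and the function names are invented for the example. It shows the structure of the problem, not the speed: the unfused path makes two passes and builds an intermediate list, while the fused path does both steps in one pass.

```python
def linear(xs, w, b):
    """First pass: apply y = w * x + b and build an intermediate list."""
    return [w * x + b for x in xs]

def relu(xs):
    """Second pass: clamp negatives to zero, building another list."""
    return [max(0.0, x) for x in xs]

def linear_relu_fused(xs, w, b):
    """Fused version: one pass over the data, no intermediate list."""
    return [max(0.0, w * x + b) for x in xs]

xs = [-2.0, -0.5, 0.0, 1.5, 3.0]
unfused = relu(linear(xs, w=2.0, b=1.0))
fused = linear_relu_fused(xs, w=2.0, b=1.0)
assert unfused == fused
```

Every extra combination (linear plus GELU, linear plus dropout, and so on) would need its own hand-written fused function, which is exactly the maintenance burden described above.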

We also have to deal with the lack of effective parallel processing in Python. Nowadays we all have computers with lots of cores, but Python generally will just use one at a time. There are some clunky ways to write parallel code which uses more than one core, but they either have to work on totally separate memory (and have a lot of overhead to start up) or they have to take it in turns to access memory (the dreaded “global interpreter lock” which often makes parallel code actually slower than single-threaded code!)
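The shared-memory option can be sketched with the standard library’s `concurrent.futures`. The `count_primes` workload and the helper names are made up for illustration. The threaded version gives the same answers as the serial one, but in standard CPython builds the global interpreter lock means the threads take turns, so no speedup should be expected for CPU-bound work like this.

```python
from concurrent.futures import ThreadPoolExecutor

def count_primes(limit):
    """CPU-bound work: count primes below `limit` by trial division."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count

def run_serial(limits):
    return [count_primes(n) for n in limits]

def run_threaded(limits, workers=4):
    # Threads share memory, but in standard CPython builds the GIL lets
    # only one of them execute Python bytecode at a time.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(count_primes, limits))

limits = [2_000, 3_000, 4_000, 5_000]
assert run_serial(limits) == run_threaded(limits)
```

Swapping in `ProcessPoolExecutor` from the same module is the separate-memory option: it can use several cores, at the cost of process startup and copying data between processes.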

Libraries like PyTorch have been developing increasingly ingenious ways to deal with these performance problems, with the newly released PyTorch 2 even including a compile() function that uses a sophisticated compilation backend to create high performance implementations of Python code. However, functionality like this can’t work magic: there are fundamental limitations on what’s possible with Python based on how the language itself is designed.

You might imagine that in practice there’s just a small number of building blocks for AI models, and so it doesn’t really matter if we have to implement each of these in C. Besides which, they’re pretty basic algorithms on the whole anyway, right? For instance, transformer models are nearly entirely implemented by multiple layers of two components, multilayer perceptrons (MLP) and attention, which can be implemented with just a few lines of Python with PyTorch. Here’s the implementation of an MLP:

    nn.Sequential(nn.Linear(ni,nh), nn.GELU(), nn.LayerNorm(nh), nn.Linear(nh,ni))

…and here’s a self-attention layer:

    def forward(self, x):
        x = self.qkv(self.norm(x))
        x = rearrange(x, 'n s (h d) -> (n h) s d', h=self.nheads)
        q,k,v = torch.chunk(x, 3, dim=-1)
        s = (q@k.transpose(1,2))/self.scale
        x = s.softmax(dim=-1)@v
        x = rearrange(x, '(n h) s d -> n s (h d)', h=self.nheads)
        return self.proj(x)

But this hides the fact that real-world implementations of these operations are far more complex. For instance, check out this memory optimised “flash attention” implementation in CUDA C. It also hides the fact that there are huge amounts of performance being left on the table by these generic approaches to building models. For instance, “block sparse” approaches can dramatically improve speed and memory use. Researchers are working on tweaks to nearly every part of common architectures, and coming up with new architectures (and SGD optimisers, and data augmentation methods, etc) – we’re not even close to having some neatly wrapped-up system that everyone will use forever more.

In practice, much of the fastest code used for language models today is being written in C and C++. For instance, Fabrice Bellard’s TextSynth and Georgi Gerganov’s ggml both use C, and as a result are able to take full advantage of the performance benefits of fully compiled languages.

Enter Mojo

Chris Lattner is responsible for creating many of the projects that we all rely on today – even though we might not even have heard of all the stuff he built! As part of his PhD thesis he started the development of LLVM, which fundamentally changed how compilers are created, and today forms the foundation of many of the most widely used language ecosystems in the world. He then went on to launch Clang, a C and C++ compiler that sits on top of LLVM, and is used by most of the world’s most significant software developers (including providing the backbone for Google’s performance critical code). LLVM includes an “intermediate representation” (IR), a special language designed for machines to read and write (instead of for people), which has enabled a huge community of software to work together to provide better programming language functionality across a wider range of hardware.

Chris saw, however, that C and C++ didn’t really fully leverage the power of LLVM, so while he was working at Apple he designed a new language, called “Swift”, which he describes as “syntax sugar for LLVM”. Swift has gone on to become one of the world’s most widely used programming languages, in particular because it is today the main way to create apps for iPhone, iPad, macOS, and Apple TV.

Unfortunately, Apple’s control of Swift has meant it hasn’t really had its time to shine outside of the cloistered Apple world. Chris led a team for a while at Google to try to move Swift out of its Apple comfort zone, to become a replacement for Python in AI model development. I was very excited about this project, but sadly it did not receive the support it needed from either Apple or from Google, and it was not ultimately successful.

Having said that, whilst at Google Chris did develop another project which became hugely successful: MLIR. MLIR is a replacement for LLVM’s IR for the modern age of many-core computing and AI workloads. It’s critical for fully leveraging the power of hardware like GPUs, TPUs, and the vector units increasingly being added to server-class CPUs.

So, if Swift was “syntax sugar for LLVM”, what’s “syntax sugar for MLIR”? The answer is: Mojo! Mojo is a brand new language that’s designed to take full advantage of MLIR. And also Mojo is Python.

Wait what?

OK let me explain. Maybe it’s better to say Mojo is Python++. It will be (when complete) a strict superset of the Python language. But it also has additional functionality so we can write high performance code that takes advantage of modern accelerators.

Mojo seems to me like a more pragmatic approach than Swift. Whereas Swift was a brand new language packing all kinds of cool features based on the latest research in programming language design, Mojo is, at its heart, just Python. This seems wise, not just because Python is already well understood by millions of coders, but also because after decades of use its capabilities and limitations are now well understood. Relying on the latest programming language research is pretty cool, but it’s potentially dangerous speculation because you never really know how things will turn out. (I will admit that personally, for instance, I often got confused by Swift’s powerful but quirky type system, and sometimes even managed to confuse the Swift compiler and blew it up entirely!)

A key trick in Mojo is that you can opt in at any time to a faster “mode” as a developer, by using “fn” instead of “def” to create your function. In this mode, you have to declare exactly what the type of every variable is, and as a result Mojo can create optimised machine code to implement your function. Furthermore, if you use “struct” instead of “class”, your attributes will be tightly packed into memory, such that they can even be used in data structures without chasing pointers around. These are the kinds of features that allow languages like C to be so fast, and now they’re accessible to Python programmers too – just by learning a tiny bit of new syntax.
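Mojo syntax is not shown here. As a loose Python-side analogy for what “tightly packed” means, the sketch below compares a list of float objects with the standard library’s `array` type. It illustrates the memory-layout idea only, and makes no claim about how Mojo’s “struct” is actually implemented.

```python
from array import array

values = [0.5, 1.5, 2.5, 3.5]

# A list holds references to separately allocated float objects,
# so reading an element means following a pointer.
boxed = list(values)

# An array with type code 'd' stores raw C doubles back to back.
packed = array("d", values)

# Each element occupies exactly `itemsize` bytes, with nothing in between.
assert len(packed.tobytes()) == packed.itemsize * len(packed)
assert list(packed) == boxed
```

The trade-off is the one described above: the packed form needs a declared element type up front, and in exchange the data sits in one contiguous block that compiled code can walk without indirection.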

How is this possible?

There have, at this point, been hundreds of attempts over decades to create programming languages which are concise, flexible, fast, practical, and easy to use – without much success. But somehow, Modular seems to have done it. How could this be? There are a couple of hypotheses we might come up with:

- Mojo hasn’t actually achieved these things, and the snazzy demo hides disappointing real-life performance, or

- Modular is a huge company with hundreds of developers working for years, putting in more hours in order to achieve something that’s not been achieved before.

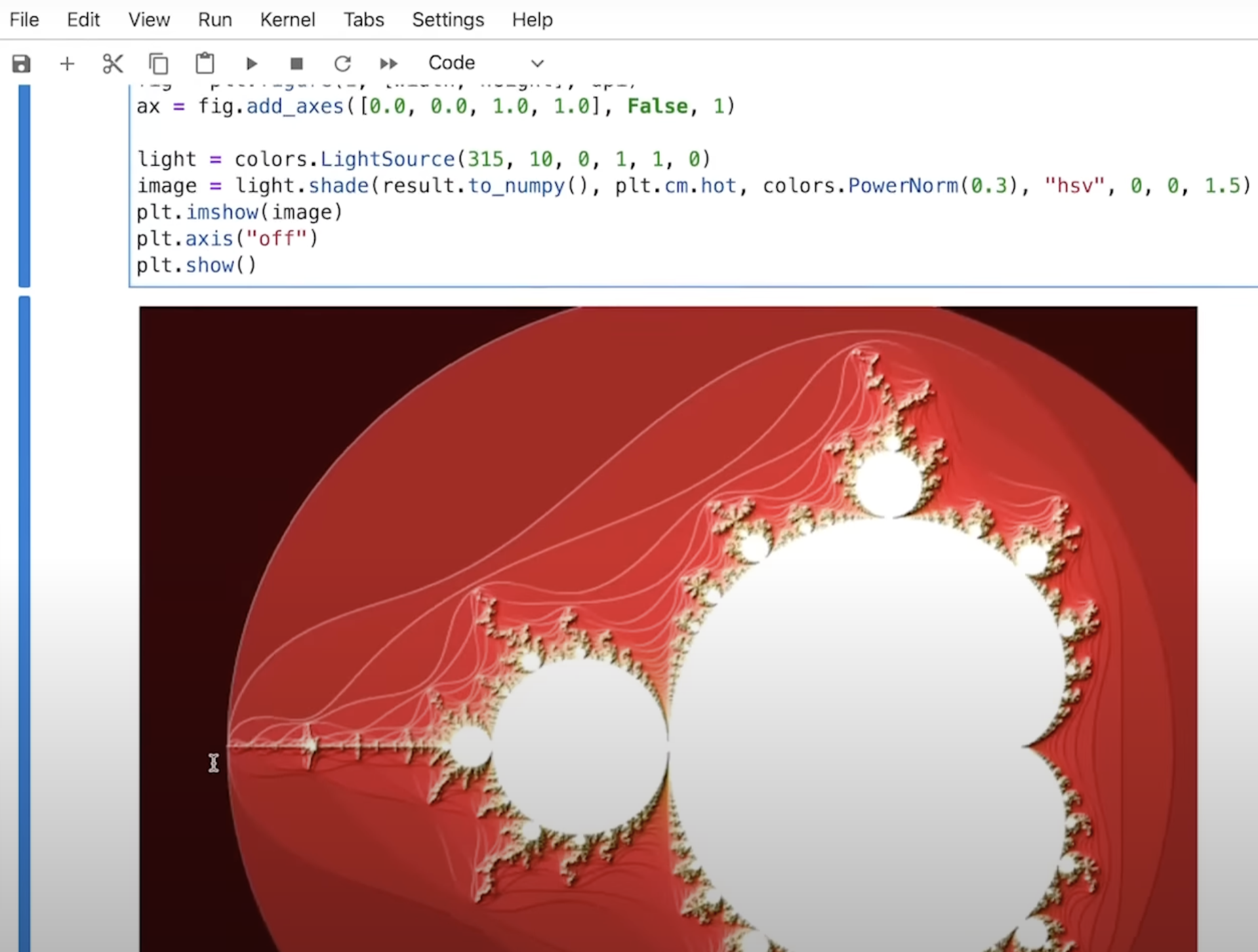

Neither of these things is true. The demo, in fact, was created in just a few days before I recorded the video. The two examples we gave (matmul and mandelbrot) were not carefully chosen as being the only things that happened to work after trying dozens of approaches; rather, they were the only things we tried for the demo and they worked first time! Whilst there are plenty of missing features at this early stage (Mojo isn’t even released to the public yet, other than an online “playground”), the demo you see really does work the way you see it. And indeed you can run it yourself now in the playground.

Modular is a fairly small startup that’s only a year old, and only one part of the company is working on the Mojo language. Mojo development was only started recently. It’s a small team, working for a short time, so how have they done so much?

The key is that Mojo builds on some really powerful foundations. Very few software projects I’ve seen spend enough time building the right foundations, and as a result they tend to accrue mounds of technical debt. Over time, it becomes harder and harder to add features and fix bugs. In a well designed system, however, every feature is easier to add than the last one, is faster, and has fewer bugs, because the foundations each feature builds upon are getting better and better. Mojo is a well designed system.

At its core is MLIR, which has already been developed for many years, initially kicked off by Chris Lattner at Google. He had recognised what the core foundations for an “AI era programming language” would need, and focused on building them. MLIR was a key piece. Just as LLVM made it dramatically easier for powerful new programming languages to be developed over the last decade (such as Rust, Julia, and Swift, which are all based on LLVM), MLIR provides an even more powerful core to languages that are built on it.

Another key enabler of Mojo’s rapid development is the decision to use Python as the syntax. Developing and iterating on syntax is one of the most error-prone, complex, and controversial parts of the development of a language. By simply outsourcing that to an existing language (which also happens to be the most widely used language today) that whole piece disappears! The relatively small number of new bits of syntax needed on top of Python then largely fit quite naturally, since the base is already in place.

The next step was to create a minimal Pythonic way to call MLIR directly. That wasn’t a big job at all, but it was all that was needed to then create all of Mojo on top of that – and work directly in Mojo for everything else. That meant that the Mojo devs were able to “dog-food” Mojo when writing Mojo, nearly from the very start. Any time they found something didn’t quite work great as they developed Mojo, they could add a needed feature to Mojo itself to make it easier for them to develop the next bit of Mojo!

This is very similar to Julia, which was developed on a minimal LISP-like core that provides the Julia language elements, which are then bound to basic LLVM operations. Nearly everything in Julia is built on top of that, using Julia itself.

I can’t begin to describe all the little (and big!) ideas throughout Mojo’s design and implementation – it’s the result of Chris and his team’s decades of work on compiler and language design and includes all the tricks and hard-won experience from that time – but what I can describe is an amazing result that I saw with my own eyes.

The Modular team internally announced that they’d decided to launch Mojo with a video, including a demo – and they set a date just a few weeks in the future. But at that time Mojo was just the most bare-bones language. There was no usable notebook kernel, hardly any of the Python syntax was implemented, and nothing was optimised. I couldn’t understand how they hoped to implement all this in a matter of weeks – let alone to make it any good! What I saw over this time was astonishing. Every day or two whole new language features were implemented, and as soon as there was enough in place to try running algorithms, generally they’d be at or near state of the art performance right away! I realised that what was happening was that all the foundations were already in place, and that they’d been explicitly designed to build the things that were now under development. So it shouldn’t have been a surprise that everything worked, and worked well – after all, that was the plan all along!

This is a reason to be optimistic about the future of Mojo. Although it’s still early days for this project, my guess, based on what I’ve observed in the last few weeks, is that it’s going to develop faster and further than most of us expect…

Deployment

I’ve left one of the bits I’m most excited about to last: deployment. Currently, if you want to give your cool Python program to a friend, then you’re going to have to tell them to first install Python! Or, you could give them an enormous file that includes the entirety of Python and the libraries you use all packaged up together, which will be extracted and loaded when they run your program.

Because Python is an interpreted language, how your program will behave will depend on the exact version of Python that’s installed, what versions of what libraries are present, and how it’s all been configured. In order to avoid this maintenance nightmare, the Python community has settled on a couple of options for installing Python applications: environments, which have a separate Python installation for each program; or containers, which have much of an entire operating system set up for each application. Both approaches lead to a lot of confusion and overhead in developing and deploying Python applications.

Compare this to deploying a statically compiled C application: you can literally just make the compiled program available for direct download. It can be just 100k or so in size, and will launch and run quickly.

There is also the approach taken by Go, which isn’t able to generate small applications like C, but instead incorporates a “runtime” into each packaged application. This approach is a compromise between Python and C, still requiring tens of megabytes for a binary, but providing for easier deployment than Python.

As a compiled language, Mojo’s deployment story is basically the same as C. For instance, a program that includes a version of matmul written from scratch is around 100k.

This means that Mojo is far more than a language for AI/ML applications. It’s actually a version of Python that allows us to write fast, small, easily-deployed applications that take advantage of all available cores and accelerators!

Alternatives to Mojo

Mojo is not the only attempt at solving the Python performance and deployment problem. In terms of languages, Julia is perhaps the strongest current alternative. It has many of the benefits of Mojo, and a lot of great projects are already built with it. The Julia folks were kind enough to invite me to give a keynote at their recent conference, and I used that opportunity to describe what I felt were the current shortcomings (and opportunities) for Julia:

As discussed in this video, Julia’s biggest challenge stems from its large runtime, which in turn stems from the decision to use garbage collection in the language. Also, the multi-dispatch approach used in Julia is a fairly unusual choice, which opens a lot of doors to do cool stuff in the language, but can also make things pretty complicated for devs. (I’m so enthused by this approach that I built a Python version of it – but I’m also as a result particularly aware of its limitations!)
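For readers who have not met the idea: dispatch means choosing which implementation of a function to run based on argument types. Python’s standard library offers only the single-argument form, `functools.singledispatch`, sketched below with a made-up `describe` function. Julia’s multiple dispatch generalises this by considering the types of all arguments. This is not the Python version mentioned above, just the nearest standard-library relative.

```python
from functools import singledispatch

@singledispatch
def describe(value):
    """Fallback used when no more specific implementation is registered."""
    return f"something else: {value!r}"

@describe.register
def _(value: int):
    return f"an integer: {value}"

@describe.register
def _(value: list):
    return f"a list of {len(value)} items"

assert describe(3) == "an integer: 3"
assert describe([1, 2]) == "a list of 2 items"
assert describe(2.5) == "something else: 2.5"
```

Even in this small form, the complication is visible: which function body runs depends on registrations that may live far from the call site.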

In Python, the most prominent current solution is probably Jax, which effectively creates a domain specific language (DSL) using Python. The output of this language is XLA, which is a machine learning compiler that predates MLIR (and is gradually being ported over to MLIR, I believe). Jax inherits the limitations of both Python (e.g. the language has no way of representing structs, or allocating memory directly, or creating fast loops) and XLA (which is largely restricted to machine learning specific concepts and is primarily targeted to TPUs), but has the huge upside that it doesn’t require a new language or new compiler.

As previously discussed, there’s also the new PyTorch compiler, and Tensorflow is also able to generate XLA code. Personally, I find using Python in this way ultimately unsatisfying. I don’t actually get to use all the power of Python, but have to use a subset that’s compatible with the backend I’m targeting. I can’t easily debug and profile the compiled code, and there’s so much “magic” going on that it’s hard to even know what actually ends up getting executed. I don’t even end up with a standalone binary, but instead have to use special runtimes and deal with complex APIs. (I’m not alone here – everyone I know that has used PyTorch or Tensorflow for targeting edge devices or optimised serving infrastructure has described it as being one of the most complex and frustrating tasks they’ve attempted! And I’m not sure I even know anyone that’s actually completed either of these things using Jax.)

Another interesting direction for Python is Numba and Cython. I’m a big fan of these projects and have used both in my teaching and software development. Numba uses a special decorator to cause a Python function to be compiled into optimised machine code using LLVM. Cython is similar, but also provides a Python-like language which has some of the features of Mojo, and converts this Python dialect into C, which is then compiled. Neither language solves the deployment challenge, but they can help a lot with the performance problem.

Neither is able to target a range of accelerators with generic cross-platform code, although Numba does provide a very useful way to write CUDA code (and so allows NVIDIA GPUs to be targeted).
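The decorator approach Numba takes looks roughly like the sketch below, which uses Numba’s `njit` decorator on a simple loop. The `sum_of_squares` function is made up for illustration, and the `ImportError` fallback is an assumption added here so the example still runs (uncompiled) where Numba is not installed.

```python
try:
    from numba import njit  # compiles the decorated function via LLVM
except ImportError:
    # Assumption for this sketch: fall back to plain Python if Numba
    # is not installed, so the example still runs (just slowly).
    def njit(func):
        return func

@njit
def sum_of_squares(n):
    """A plain Python loop, which is the kind of code Numba targets."""
    total = 0
    for i in range(n):
        total += i * i
    return total

assert sum_of_squares(10) == 285
```

Note that the function body has to stay within the subset of Python that Numba can compile, which is the same kind of restriction discussed above for other compiled backends.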

I’m really grateful Numba and Cython exist, and have personally gotten a lot out of them. However they’re not at all the same as using a complete language and compiler that generates standalone binaries. They’re bandaid solutions for Python’s performance problems, and are fine for situations where that’s all you need.

But I’d much prefer to use a language that’s as elegant as Python and as fast as expert-written C, allows me to use one language to write everything from the application server, to the model architecture and the installer too, and lets me debug and profile my code directly in the language in which I wrote it.

How would you want a language like that?
