Deepgram has made a name for itself as one of the go-to startups for voice recognition. Today, the well-funded company announced the launch of Aura, its new real-time text-to-speech API. Aura combines highly realistic voice models with a low-latency API to allow developers to build real-time, conversational AI agents. Backed by large language models (LLMs), these agents can then stand in for customer service agents in call centers and other customer-facing situations.

As Deepgram co-founder and CEO Scott Stephenson told me, it’s long been possible to get access to great voice models, but those were expensive and took a long time to compute. Meanwhile, low-latency models tend to sound robotic. Deepgram’s Aura combines human-like voice models that render extremely fast (typically in well under half a second) and, as Stephenson noted repeatedly, does so at a low price.

“Everybody now is like: ‘hey, we need real-time voice AI bots that can perceive what is being said and that can understand and generate a response, and then they can speak back,’” he said. In his view, it takes a combination of accuracy (which he described as table stakes for a service like this), low latency and acceptable costs to make a product like this worthwhile for businesses, especially when combined with the relatively high cost of accessing LLMs.

Deepgram argues that Aura’s pricing currently beats virtually all its competitors at $0.015 per 1,000 characters. That’s not all that far off Google’s pricing for its WaveNet voices at $0.016 per 1,000 characters and Amazon Polly’s Neural voices at the same $0.016 per 1,000 characters, but, granted, it is cheaper. Amazon’s highest tier, though, is significantly more expensive.
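To put those per-character prices in perspective, here is a minimal Python sketch that turns them into a bill for a given volume of text. The three prices are the ones quoted above; the function names and the 10-million-character monthly volume are assumptions made up for illustration, and real invoices may include tiers, minimums or free quotas that this ignores.

```python
# Per-1,000-character prices in USD, as quoted in the article.
PRICE_PER_1K_CHARS = {
    "Deepgram Aura": 0.015,
    "Google WaveNet": 0.016,
    "Amazon Polly Neural": 0.016,
}


def synthesis_cost(num_chars: int, price_per_1k: float) -> float:
    """Return the cost in USD of synthesizing num_chars characters."""
    return num_chars / 1000 * price_per_1k


def compare_costs(num_chars: int) -> dict[str, float]:
    """Return the cost per provider for the same amount of text."""
    return {
        name: synthesis_cost(num_chars, price)
        for name, price in PRICE_PER_1K_CHARS.items()
    }


if __name__ == "__main__":
    # Hypothetical workload: 10 million characters per month.
    monthly_chars = 10_000_000
    for name, cost in compare_costs(monthly_chars).items():
        print(f"{name}: ${cost:,.2f}")
```

At that hypothetical volume, the gap works out to roughly $150 versus $160 a month, which is why the difference only starts to matter at call-center scale.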

“You have to hit a really good price point across all [segments], but then you have to also have amazing latencies, speed, and then amazing accuracy as well. So it’s a really hard thing to hit,” Stephenson said about Deepgram’s general approach to building its product. “But this is what we focused on from the beginning and this is why we built for four years before we released anything because we were building the underlying infrastructure to make that real.”

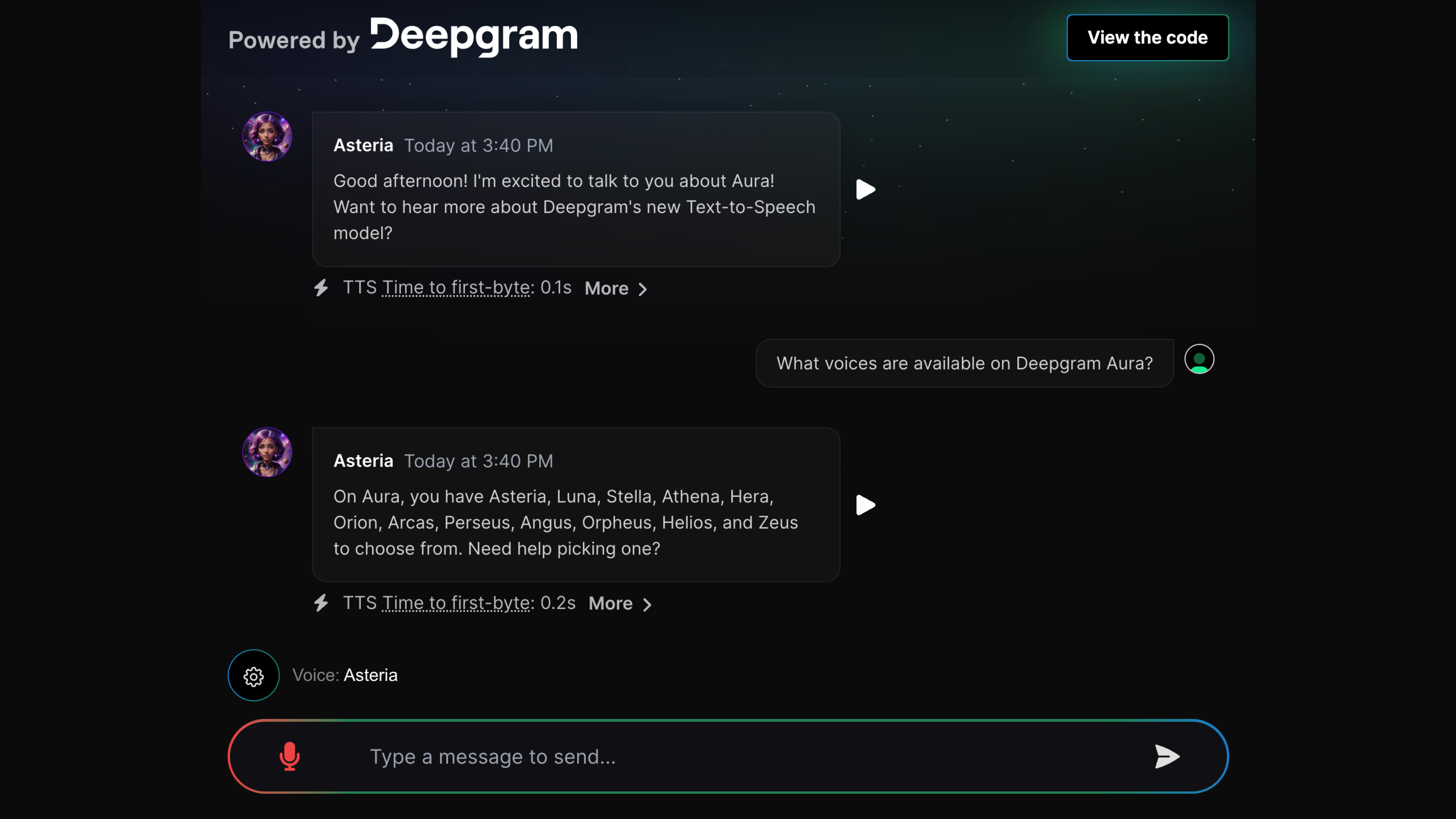

Aura offers around a dozen voice models at this point, all of which were trained on a dataset Deepgram created together with voice actors. The Aura model, just like all of the company’s other models, was trained in-house.

You can try a demo of Aura here. I’ve been testing it for a bit and even though you’ll sometimes come across some odd pronunciations, the speed is really what stands out, in addition to Deepgram’s existing high-quality speech-to-text model. To highlight the speed at which it generates responses, Deepgram notes the time it took the model to start speaking (generally less than 0.3 seconds) and how long it took the LLM to finish generating its response (which is typically just under a second).
