
Introduction

In this post we will use Keras to classify duplicated questions from Quora.

The dataset first appeared in the Kaggle competition Quora Question Pairs and consists of approximately 400,000 pairs of questions, along with a column indicating whether the question pair is considered a duplicate.

Our implementation is inspired by the Siamese Recurrent Architecture, with modifications to the similarity measure and the embedding layers (the original paper uses pre-trained word vectors). Using this kind of architecture dates back to 2005 with Le Cun et al and is useful for verification tasks. The idea is to learn a function that maps input patterns into a target space such that a similarity measure in the target space approximates the “semantic” distance in the input space.

After the competition, Quora also described their approach to this problem in this blog post.

Downloading data

Data can be downloaded from the Kaggle dataset webpage or from Quora’s release of the dataset.

We’re using the Keras get_file() function so that the file download is cached.

Reading and preprocessing

We’ll first load the data into R and do some preprocessing to make it easier to include in the model. After downloading the data, you can read it using the readr read_tsv() function.

We’ll create a Keras tokenizer to transform each word into an integer token. We will also specify a hyperparameter of our model: the vocabulary size. For now let’s use the 50,000 most common words (we’ll tune this parameter later). The tokenizer will be fit using all unique questions from the dataset.
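To make concrete what the tokenizer does, here is a simplified base-R sketch of a frequency-ranked vocabulary. It is not the Keras implementation: the helpers split_words(), build_vocab() and texts_to_ids() are hypothetical, and the splitting rule (lowercase, split on anything that is not a letter, digit or apostrophe) is an assumption. It keeps the main ideas: index 1 is the most frequent word, index 0 stays unused, and only indices below num_words are kept. This sketch simply drops words that fall outside the vocabulary.

```r
# Hypothetical stand-in for text_tokenizer() + texts_to_sequences().
# Assumption: lowercase the text and split on runs of characters that are
# not letters, digits or apostrophes.
split_words <- function(texts) {
  lapply(strsplit(tolower(texts), "[^a-z0-9']+"), function(w) w[nzchar(w)])
}

build_vocab <- function(texts, num_words) {
  counts <- sort(table(unlist(split_words(texts))), decreasing = TRUE)
  vocab <- seq_along(counts)          # 1 = most frequent word; 0 stays unused
  names(vocab) <- names(counts)
  vocab[vocab < num_words]            # keep only the top num_words - 1 words
}

texts_to_ids <- function(texts, vocab) {
  lapply(split_words(texts), function(words) {
    ids <- vocab[words]               # NA for words not in the vocabulary
    unname(ids[!is.na(ids)])          # this sketch drops out-of-vocabulary words
  })
}

vocab <- build_vocab(c("what is r", "what is r", "what is python", "what"),
                     num_words = 4)
vocab                                  # what = 1, is = 2, r = 3
texts_to_ids("What is Python?", vocab) # list(c(1, 2)): "python" is dropped
```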

```r
library(keras)

tokenizer <- text_tokenizer(num_words = 50000)
tokenizer %>% fit_text_tokenizer(unique(c(df$question1, df$question2)))
```

Let’s save the tokenizer to disk so that we can use it for inference later.

```r
save_text_tokenizer(tokenizer, "tokenizer-question-pairs")
```

We’ll now use the text tokenizer to transform each question into a list of integers.

```r
question1 <- texts_to_sequences(tokenizer, df$question1)
question2 <- texts_to_sequences(tokenizer, df$question2)
```

Let’s take a look at the number of words in each question. This will help us to decide the padding length, another hyperparameter of our model. Padding the sequences normalizes them to the same size so that we can feed them to the Keras model.
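The code that produced the percentiles below is not shown. One way to compute them in base R is to take the length of every tokenized question and call quantile(); the toy lists here are made-up stand-ins for question1 and question2.

```r
# Made-up tokenized questions standing in for question1 and question2
toy_question1 <- list(c(4L, 8L, 15L), c(16L, 23L), c(42L, 4L, 8L, 15L, 16L))
toy_question2 <- list(c(4L, 8L), c(16L, 23L, 42L, 1L), 7L)

# Number of tokens in every question, from both columns
questions_length <- c(lengths(toy_question1), lengths(toy_question2))

# Percentiles used to pick a padding length
length_quantiles <- quantile(questions_length, c(0.8, 0.9, 0.95, 0.99))
length_quantiles  # 80%: 4, 90%: 4.5, 95%: 4.75, 99%: 4.95
```

Replacing the toy lists with the real question1 and question2 should produce a percentile table like the one below.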

```
80% 90% 95% 99% 
 14  18  23  31 
```

We can see that 99% of questions have a length of at most 31, so we’ll choose a padding length between 15 and 30. Let’s start with 20 (we’ll also tune this parameter later).

The default padding value is 0, but we’re already using this value for words that don’t appear within the 50,000 most frequent, so we’ll use 50,001 instead.
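A small base-R sketch of what this padding does, assuming pad_sequences() keeps its defaults of padding = "pre" and truncating = "pre" (fill on the left, and cut overly long sequences from the front). pad_pre() is a hypothetical helper, not part of keras.

```r
# Hypothetical helper mimicking pad_sequences(padding = "pre", truncating = "pre")
pad_pre <- function(seqs, maxlen, value) {
  rows <- lapply(seqs, function(s) {
    s <- tail(s, maxlen)                   # too long: keep the last maxlen ids
    c(rep(value, maxlen - length(s)), s)   # too short: fill on the left with value
  })
  matrix(unlist(rows), ncol = maxlen, byrow = TRUE)
}

padded <- pad_pre(list(c(1, 2, 3), 4:9), maxlen = 5, value = 50000 + 1)
padded
# row 1: 50001 50001 1 2 3
# row 2:     5     6 7 8 9
```

Every row ends up with exactly maxlen entries, which is what lets the two question matrices be fed to a model with a fixed input shape.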

```r
question1_padded <- pad_sequences(question1, maxlen = 20, value = 50000 + 1)
question2_padded <- pad_sequences(question2, maxlen = 20, value = 50000 + 1)
```

We have now finished the preprocessing steps. We’ll now run a simple benchmark model before moving on to the Keras model.

Simple benchmark

Before creating a complicated model, let’s take a simple approach. Let’s create two predictors: the percentage of words from question1 that appear in question2, and vice versa. Then we will use a logistic regression to predict whether the questions are duplicates.
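The two predictors are easy to check by hand on tiny examples. This base-R sketch uses mapply() in place of purrr::map2_dbl() (same idea, no extra package); perc_overlap() and the toy sequences are made up. Note the third pair: a question with no tokens gives mean(logical(0)), which is NaN, and rows like that are the reason for the na.omit() call.

```r
# mapply() version of map2_dbl(a, b, ~mean(.x %in% .y))
perc_overlap <- function(a, b) mapply(function(x, y) mean(x %in% y), a, b)

toy_q1 <- list(c(1L, 2L, 3L, 4L), c(5L, 6L), integer(0))
toy_q2 <- list(c(1L, 2L, 9L), c(7L, 8L), 3L)

perc_overlap(toy_q1, toy_q2)  # 0.5 0.0 NaN (the empty question gives NaN)
perc_overlap(toy_q2, toy_q1)  # 0.6666667 0.0 0.0
```

The two directions differ because each predictor is normalized by the length of its own question.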

```r
library(purrr)

perc_words_question1 <- map2_dbl(question1, question2, ~mean(.x %in% .y))
perc_words_question2 <- map2_dbl(question2, question1, ~mean(.x %in% .y))

df_model <- data.frame(
  perc_words_question1 = perc_words_question1,
  perc_words_question2 = perc_words_question2,
  is_duplicate = df$is_duplicate
) %>%
  na.omit()
```

Now that we have our predictors, let’s create the logistic model. We’ll take a small sample for validation.

```r
val_sample <- sample.int(nrow(df_model), 0.1*nrow(df_model))

logistic_regression <- glm(
  is_duplicate ~ perc_words_question1 + perc_words_question2,
  family = "binomial",
  data = df_model[-val_sample,]
)

summary(logistic_regression)
```

```
Call:
glm(formula = is_duplicate ~ perc_words_question1 + perc_words_question2, 
    family = "binomial", data = df_model[-val_sample, ])

Deviance Residuals: 
    Min       1Q   Median       3Q      Max  
-1.5938  -0.9097  -0.6106   1.1452   2.0292  

Coefficients:
                      Estimate Std. Error z value Pr(>|z|)    
(Intercept)          -2.259007   0.009668 -233.66   <2e-16 ***
perc_words_question1  1.517990   0.023038   65.89   <2e-16 ***
perc_words_question2  1.681410   0.022795   73.76   <2e-16 ***
---
Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

(Dispersion parameter for binomial family taken to be 1)

    Null deviance: 479158  on 363843  degrees of freedom
Residual deviance: 431627  on 363841  degrees of freedom
  (17 observations deleted due to missingness)
AIC: 431633

Number of Fisher Scoring iterations: 3
```

Let’s calculate the accuracy on our validation set.

```
[1] 0.6573577
```

We got an accuracy of 65.7%. Not all that much better than random guessing.
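The code for the accuracy step is not shown above. A minimal sketch: threshold the predicted probabilities and compare them with the labels. The helper accuracy_at() and the synthetic data are made up for illustration, and the threshold is an assumption: 0.5 is the usual default, while the share of duplicates in the training set (used below) is another common choice for imbalanced labels.

```r
# Hypothetical helper: share of thresholded predictions that match the 0/1 labels
accuracy_at <- function(prob, truth, threshold = 0.5) {
  mean((prob > threshold) == (truth == 1))
}

# Synthetic stand-in for df_model: one overlap feature, labels from a logistic model
set.seed(1)
n <- 1000
toy <- data.frame(overlap = runif(n))
toy$is_duplicate <- rbinom(n, 1, plogis(-2 + 3 * toy$overlap))

val <- sample.int(n, 0.1 * n)
fit <- glm(is_duplicate ~ overlap, family = "binomial", data = toy[-val, ])
prob <- predict(fit, toy[val, ], type = "response")

# Threshold at the training-set share of duplicates
accuracy_at(prob, toy$is_duplicate[val], threshold = mean(toy$is_duplicate[-val]))
```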

Now let’s create our model in Keras.

Model definition

We’ll use a Siamese network to predict whether the pairs are duplicated or not. The idea is to create a model that can embed the questions (sequences of words) into a vector. Then we can compare the vectors for each question using a similarity measure and tell whether the questions are duplicated or not.

First let’s define the inputs for the model.

Then let’s define the part of the model that will embed the questions in a vector.

```r
word_embedder <- layer_embedding(
  input_dim = 50000 + 2,     # vocab size + UNK token + padding value
  output_dim = 128,          # hyperparameter - embedding size
  input_length = 20,         # padding size
  embeddings_regularizer = regularizer_l2(0.0001) # hyperparameter - regularization
)

seq_embedder <- layer_lstm(
  units = 128, # hyperparameter -- sequence embedding size
  kernel_regularizer = regularizer_l2(0.0001) # hyperparameter - regularization
)
```

Now we will define the relationship between the input vectors and the embedding layers. Note that we use the same layers and weights on both inputs. That’s why this is called a Siamese network. It makes sense, because we don’t want to have different outputs if question1 is switched with question2.
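A toy illustration of why sharing weights matters. Here a made-up encoder averages rows of an embedding matrix (a bag-of-words mean standing in for the LSTM, an assumption made for brevity), and the score is the dot product of the two encodings. With one shared encoder, swapping the questions cannot change the score; with two separate embedding matrices it generally does.

```r
set.seed(42)
vocab_size <- 10
emb_dim <- 4
# Row i + 1 holds the embedding of token id i (R indexing starts at 1)
E_shared <- matrix(rnorm((vocab_size + 1) * emb_dim), ncol = emb_dim)
E_other  <- matrix(rnorm((vocab_size + 1) * emb_dim), ncol = emb_dim)

# Toy encoder: mean of the embeddings of the token ids
encode <- function(ids, E) colMeans(E[ids + 1, , drop = FALSE])

# Siamese: both questions go through the same weights
score_shared <- function(q1, q2) sum(encode(q1, E_shared) * encode(q2, E_shared))
# Not Siamese: each question gets its own weights
score_separate <- function(q1, q2) sum(encode(q1, E_shared) * encode(q2, E_other))

a <- c(1, 5, 7)
b <- c(2, 5, 9)
score_shared(a, b) == score_shared(b, a)  # TRUE: the order of the questions is irrelevant
score_separate(a, b) - score_separate(b, a)  # generally nonzero
```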

We then define the similarity measure we want to optimize. We want duplicated questions to have higher values of similarity. In this example we’ll use the cosine similarity, but any similarity measure could be used. Remember that the cosine similarity is the normalized dot product of the vectors, but for training it’s not necessary to normalize the results.
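To see the difference numerically, here is a base-R sketch comparing the plain dot product with the cosine similarity (the helper names are made up). Scaling a vector changes the dot product but not the cosine. As far as we know, layer_dot() computes the plain dot product unless its normalize argument is set to TRUE, so with the default settings the layer named cosine_similarity outputs an unnormalized dot product.

```r
dot_product <- function(u, v) sum(u * v)
cosine_sim  <- function(u, v) dot_product(u, v) / (sqrt(sum(u^2)) * sqrt(sum(v^2)))

u <- c(1, 2, 2)   # length 3
v <- 2 * u        # same direction, length 6
w <- c(2, -1, 0)  # orthogonal to u

dot_product(u, v)  # 18
cosine_sim(u, v)   # 1
cosine_sim(u, w)   # 0
```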

```r
cosine_similarity <- layer_dot(list(vector1, vector2), axes = 1)
```

Next, we define a final sigmoid layer to output the probability of both questions being duplicated.
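Because the final layer_dense(units = 1, activation = "sigmoid") receives a single number (the similarity), it amounts to sigmoid(w * similarity + b) with just two learned parameters, w and b. A sketch with made-up values for w and b:

```r
sigmoid <- function(x) 1 / (1 + exp(-x))

# w and b are made up here; the model learns them during training
duplicate_prob <- function(similarity, w = 2, b = -1) sigmoid(w * similarity + b)

p <- duplicate_prob(c(-1, 0.5, 3))
p  # about 0.047, 0.5, 0.993
```

With a positive w, a higher similarity always maps to a higher duplicate probability, which is the behaviour the training objective pushes toward.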

```r
output <- cosine_similarity %>%
  layer_dense(units = 1, activation = "sigmoid")
```

Now let’s define the Keras model in terms of its inputs and outputs and compile it. In the compilation step we define our loss function and optimizer. As in the Kaggle challenge, we will minimize the logloss (equivalent to minimizing the binary crossentropy). We’ll use the Adam optimizer.
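For reference, the logloss (binary crossentropy) for labels y in {0, 1} and predicted probabilities p is the negative mean of y log(p) + (1 - y) log(1 - p). A base-R sketch; log_loss() is a made-up helper, and the clipping constant eps is an assumption added to avoid log(0).

```r
log_loss <- function(y, p, eps = 1e-15) {
  p <- pmin(pmax(p, eps), 1 - eps)  # clip probabilities away from 0 and 1
  -mean(y * log(p) + (1 - y) * log(1 - p))
}

y <- c(1, 0, 1, 0)
log_loss(y, c(0.9, 0.1, 0.8, 0.3))  # about 0.198: confident and mostly right
log_loss(y, rep(0.5, 4))            # log(2), about 0.693: always saying 0.5
```

Confident wrong predictions are penalized heavily, which is why the loss rewards well-calibrated probabilities rather than just correct thresholded labels.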

We can then take a look at our model with the summary function.
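The parameter counts in the summary can be reproduced by hand. Because the two branches share their layers, each layer is counted only once. The LSTM formula assumes the standard Keras LSTM with a bias term: four gates, each with an input kernel, a recurrent kernel and a bias vector.

```r
vocab_rows <- 50000 + 2   # vocabulary + UNK token + padding value
emb_dim    <- 128
lstm_units <- 128

embedding_params <- vocab_rows * emb_dim                                  # 6,400,256
lstm_params <- 4 * (lstm_units * (emb_dim + lstm_units) + lstm_units)     # 131,584
dense_params <- 1 * 1 + 1                                                 # 1 weight + 1 bias = 2

embedding_params + lstm_params + dense_params                             # 6,531,842
```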

```
_______________________________________________________________________________________
Layer (type)                  Output Shape         Param #    Connected to
=======================================================================================
input_question1 (InputLayer)  (None, 20)           0
_______________________________________________________________________________________
input_question2 (InputLayer)  (None, 20)           0
_______________________________________________________________________________________
embedding_1 (Embedding)       (None, 20, 128)      6400256    input_question1[0][0]
                                                              input_question2[0][0]
_______________________________________________________________________________________
lstm_1 (LSTM)                 (None, 128)          131584     embedding_1[0][0]
                                                              embedding_1[1][0]
_______________________________________________________________________________________
dot_1 (Dot)                   (None, 1)            0          lstm_1[0][0]
                                                              lstm_1[1][0]
_______________________________________________________________________________________
dense_1 (Dense)               (None, 1)            2          dot_1[0][0]
=======================================================================================
Total params: 6,531,842
Trainable params: 6,531,842
Non-trainable params: 0
_______________________________________________________________________________________
```

Model fitting

Now we will fit and tune our model. However, before proceeding let’s take a sample for validation.

```r
set.seed(1817328)
val_sample <- sample.int(nrow(question1_padded), size = 0.1*nrow(question1_padded))

train_question1_padded <- question1_padded[-val_sample,]
train_question2_padded <- question2_padded[-val_sample,]
train_is_duplicate <- df$is_duplicate[-val_sample]

val_question1_padded <- question1_padded[val_sample,]
val_question2_padded <- question2_padded[val_sample,]
val_is_duplicate <- df$is_duplicate[val_sample]
```

Now we use the fit() function to train the model:

```
Train on 363861 samples, validate on 40429 samples
Epoch 1/10
363861/363861 [==============================] - 89s 245us/step - loss: 0.5860 - acc: 0.7248 - val_loss: 0.5590 - val_acc: 0.7449
Epoch 2/10
363861/363861 [==============================] - 88s 243us/step - loss: 0.5528 - acc: 0.7461 - val_loss: 0.5472 - val_acc: 0.7510
Epoch 3/10
363861/363861 [==============================] - 88s 242us/step - loss: 0.5428 - acc: 0.7536 - val_loss: 0.5439 - val_acc: 0.7515
Epoch 4/10
363861/363861 [==============================] - 88s 242us/step - loss: 0.5353 - acc: 0.7595 - val_loss: 0.5358 - val_acc: 0.7590
Epoch 5/10
363861/363861 [==============================] - 88s 242us/step - loss: 0.5299 - acc: 0.7633 - val_loss: 0.5358 - val_acc: 0.7592
Epoch 6/10
363861/363861 [==============================] - 88s 242us/step - loss: 0.5256 - acc: 0.7662 - val_loss: 0.5309 - val_acc: 0.7631
Epoch 7/10
363861/363861 [==============================] - 88s 242us/step - loss: 0.5211 - acc: 0.7701 - val_loss: 0.5349 - val_acc: 0.7586
Epoch 8/10
363861/363861 [==============================] - 88s 242us/step - loss: 0.5173 - acc: 0.7733 - val_loss: 0.5278 - val_acc: 0.7667
Epoch 9/10
363861/363861 [==============================] - 88s 242us/step - loss: 0.5138 - acc: 0.7762 - val_loss: 0.5292 - val_acc: 0.7667
Epoch 10/10
363861/363861 [==============================] - 88s 242us/step - loss: 0.5092 - acc: 0.7794 - val_loss: 0.5313 - val_acc: 0.7654
```

After training completes, we can save our model for inference with the save_model_hdf5() function.

```r
save_model_hdf5(model, "model-question-pairs.hdf5")
```

Model tuning

Now that we have a reasonable model, let’s tune the hyperparameters using the tfruns package. We’ll begin by adding FLAGS declarations to our script for all hyperparameters we want to tune (FLAGS allow us to vary hyperparameters without changing our source code):

```r
library(tfruns)

FLAGS <- flags(
  flag_integer("vocab_size", 50000),
  flag_integer("max_len_padding", 20),
  flag_integer("embedding_size", 256),
  flag_numeric("regularization", 0.0001),
  flag_integer("seq_embedding_size", 512)
)
```

With this FLAGS definition we can now write our code in terms of the flags. For example:

```r
input1 <- layer_input(shape = c(FLAGS$max_len_padding))
input2 <- layer_input(shape = c(FLAGS$max_len_padding))

embedding <- layer_embedding(
  input_dim = FLAGS$vocab_size + 2,
  output_dim = FLAGS$embedding_size,
  input_length = FLAGS$max_len_padding,
  embeddings_regularizer = regularizer_l2(l = FLAGS$regularization)
)
```

The full source code of the script with FLAGS can be found here.

We also added an early stopping callback to the training step in order to stop training if the validation loss doesn’t decrease for 5 epochs in a row. This will hopefully reduce training time for bad models. We also added a learning rate reducer that cuts the learning rate by a factor of 10 when the loss doesn’t decrease for 3 epochs (this technique often increases model accuracy).
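In the keras R package these callbacks are presumably callback_early_stopping(patience = 5) and callback_reduce_lr_on_plateau(patience = 3), whose default factor of 0.1 matches the tenfold reduction; the full script is not reproduced here. The patience logic itself can be sketched in base R. simulate_callbacks() and the loss values are made up, and the sketch simplifies the real callbacks (no min_delta, no cooldown).

```r
# Simplified simulation of early stopping + learning-rate reduction on val_loss
simulate_callbacks <- function(val_loss, lr = 0.001,
                               stop_patience = 5, lr_patience = 3, factor = 0.1) {
  best <- Inf
  wait_stop <- 0
  wait_lr <- 0
  lrs <- numeric(0)
  for (epoch in seq_along(val_loss)) {
    lrs[epoch] <- lr                          # learning rate used in this epoch
    if (val_loss[epoch] < best) {             # improvement: reset both counters
      best <- val_loss[epoch]
      wait_stop <- 0
      wait_lr <- 0
    } else {
      wait_stop <- wait_stop + 1
      wait_lr <- wait_lr + 1
      if (wait_lr >= lr_patience) {           # plateau: shrink the learning rate
        lr <- lr * factor
        wait_lr <- 0
      }
      if (wait_stop >= stop_patience) {       # no improvement for too long: stop
        return(list(stopped_at = epoch, lr = lrs))
      }
    }
  }
  list(stopped_at = length(val_loss), lr = lrs)
}

res <- simulate_callbacks(c(0.60, 0.55, 0.54, 0.545, 0.55, 0.56, 0.57, 0.58, 0.52))
res$stopped_at  # 8: five epochs without improvement after epoch 3
res$lr          # 0.001 for epochs 1-6, then 1e-04
```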

We can now execute a tuning run to probe for the optimal combination of hyperparameters. We call the tuning_run() function, passing a list with the possible values for each flag. The tuning_run() function will be responsible for executing the script for all combinations of hyperparameters. We also specify the sample parameter to train the model for only a random sample of all combinations (reducing training time significantly).

```r
library(tfruns)

runs <- tuning_run(
  "question-pairs.R",
  flags = list(
    vocab_size = c(30000, 40000, 50000, 60000),
    max_len_padding = c(15, 20, 25),
    embedding_size = c(64, 128, 256),
    regularization = c(0.00001, 0.0001, 0.001),
    seq_embedding_size = c(128, 256, 512)
  ),
  runs_dir = "tuning",
  sample = 0.2
)
```

The tuning run will return a data.frame with results for all runs.
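It helps to see how much work sample = 0.2 saves. The flags above define a full grid of 4 × 3 × 3 × 3 × 3 = 324 combinations. This base-R sketch builds that grid with expand.grid() and draws a 20% random subset; how tfruns itself rounds the sample size is not shown here, so the use of round() is an assumption.

```r
grid <- expand.grid(
  vocab_size = c(30000, 40000, 50000, 60000),
  max_len_padding = c(15, 20, 25),
  embedding_size = c(64, 128, 256),
  regularization = c(0.00001, 0.0001, 0.001),
  seq_embedding_size = c(128, 256, 512)
)
nrow(grid)     # 324 combinations in the full grid

set.seed(1)
sampled <- grid[sample.int(nrow(grid), round(0.2 * nrow(grid))), ]
nrow(sampled)  # 65 combinations actually trained in this sketch
```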

We found that the best run attained 84.9% accuracy using the combination of hyperparameters shown below, so we modify our training script to use these values as the defaults:

```r
FLAGS <- flags(
  flag_integer("vocab_size", 50000),
  flag_integer("max_len_padding", 20),
  flag_integer("embedding_size", 256),
  flag_numeric("regularization", 1e-4),
  flag_integer("seq_embedding_size", 512)
)
```

Making predictions

Now that we have trained and tuned our model, we can start making predictions. At prediction time we will load both the text tokenizer and the model we saved to disk earlier.

Since we won’t continue training the model, we specified the compile = FALSE argument.

Now let’s define a function to create predictions. In this function we preprocess the input data in the same way we preprocessed the training data:

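```r
predict_question_pairs <- function(model, tokenizer, q1, q2) {
  # Tokenize with the same tokenizer that was fitted on the training questions
  q1 <- texts_to_sequences(tokenizer, list(q1))
  q2 <- texts_to_sequences(tokenizer, list(q2))

  # Pad exactly as during training: length 20, padding value 50,001
  q1 <- pad_sequences(q1, maxlen = 20, value = 50000 + 1)
  q2 <- pad_sequences(q2, maxlen = 20, value = 50000 + 1)

  as.numeric(predict(model, list(q1, q2)))
}
```

We can now call it with new pairs of questions, for example: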

```r
predict_question_pairs(
  model,
  tokenizer,
  "What's R programming?",
  "What's R in programming?"
)
```

```
[1] 0.9784008
```

Prediction is quite fast (~40 milliseconds).

Deploying the mannequin

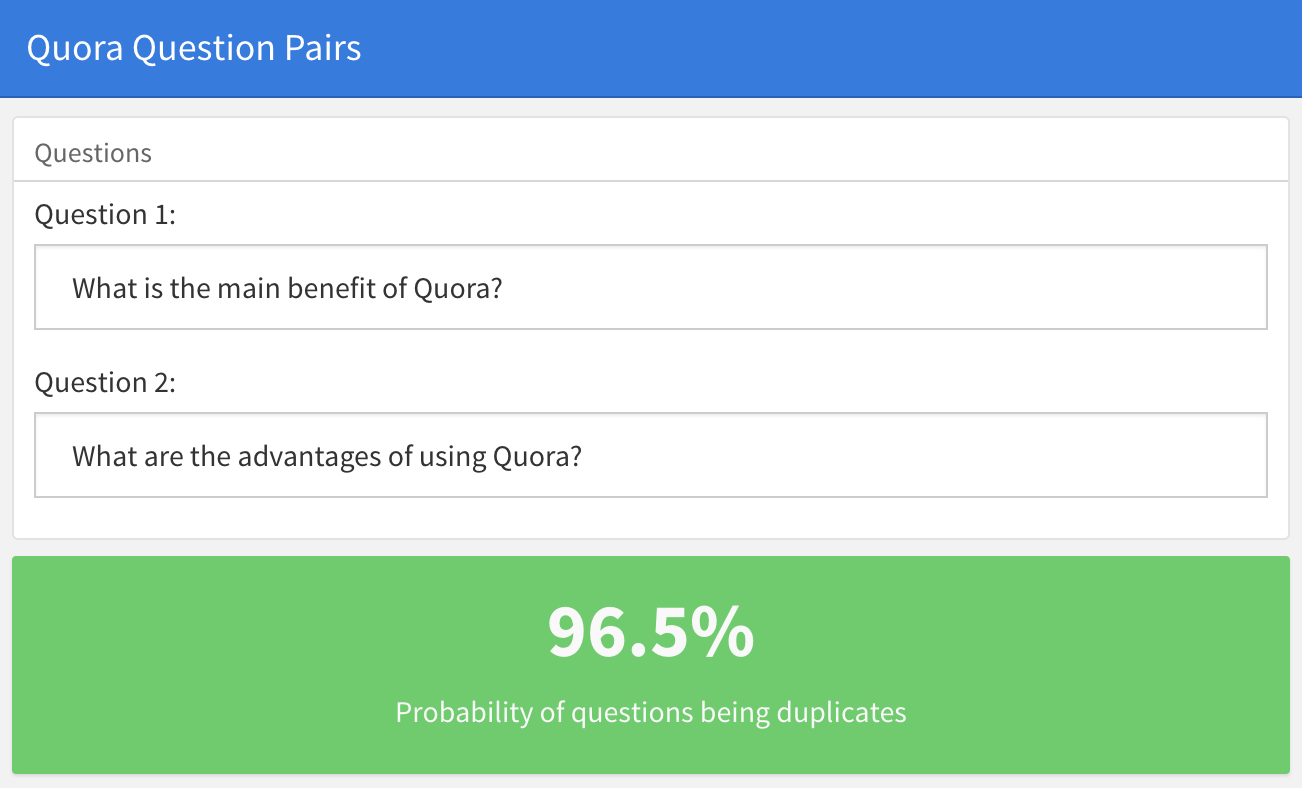

To reveal deployment of the educated mannequin, we created a easy Shiny utility, the place

you possibly can paste 2 questions from Quora and discover the chance of them being duplicated. Attempt altering the questions beneath or getting into two solely totally different questions.

The shiny utility could be discovered at https://jjallaire.shinyapps.io/shiny-quora/ and it’s supply code at https://github.com/dfalbel/shiny-quora-question-pairs.

Notice that when deploying a Keras mannequin you solely must load the beforehand saved mannequin file and tokenizer (no coaching knowledge or mannequin coaching steps are required).

Wrapping up

- We trained a Siamese LSTM that gives us reasonable accuracy (84%). Quora’s state of the art is 87%.

- We can improve our model by using pre-trained word embeddings on larger datasets. For example, try using what’s described in this example. Quora uses their own complete corpus to train the word embeddings.

- After training, we deployed our model as a Shiny application which, given two Quora questions, calculates the probability of their being duplicates.
