Summary: recently, while fine-tuning a large language model (LLM) on multiple-choice science exam questions, we observed some highly unusual training loss curves. In particular, it appeared the model was able to rapidly memorize examples from the dataset after seeing them just once. This astonishing feat contradicts most prior wisdom about neural network sample efficiency. Intrigued by this result, we conducted a series of experiments to validate and better understand this phenomenon. It’s early days, but the experiments support the hypothesis that the models are able to rapidly remember inputs. This might mean we have to re-think how we train and use LLMs.

How neural networks learn

We train neural network classifiers by showing them examples of inputs and outputs, and they learn to predict outputs based on inputs. For example, we show examples of pictures of dogs and cats, along with the breed of each, and they learn to guess the breed from the image. To be more precise, for a list of possible breeds, they output their guess as to the probability of each breed. If the model is unsure, it will guess a roughly equal probability for each possible breed, and if it’s highly confident, it will guess a nearly 1.0 probability for its predicted breed.
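As a rough illustration of the “unsure” and “highly confident” cases, here is a minimal sketch that assumes the classifier turns raw scores into probabilities with a softmax, which is a common choice but not something stated above. The breed names and score values are made up for the example.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

breeds = ["beagle", "poodle", "siamese", "tabby"]  # hypothetical labels

unsure = softmax([0.1, 0.0, -0.1, 0.0])     # near-equal scores
confident = softmax([9.0, 0.0, -1.0, 0.5])  # one score dominates

for name, p_unsure, p_conf in zip(breeds, unsure, confident):
    print(f"{name:8s} unsure={p_unsure:.3f} confident={p_conf:.3f}")
```

With near-equal scores every breed gets roughly a quarter of the probability, while a single dominant score pushes its breed close to 1.0.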

The training process consists of every image in a training set being shown to the network, along with the correct label. A pass through all the input data is called an “epoch”. We have to provide many examples of the training data for the model to learn effectively.

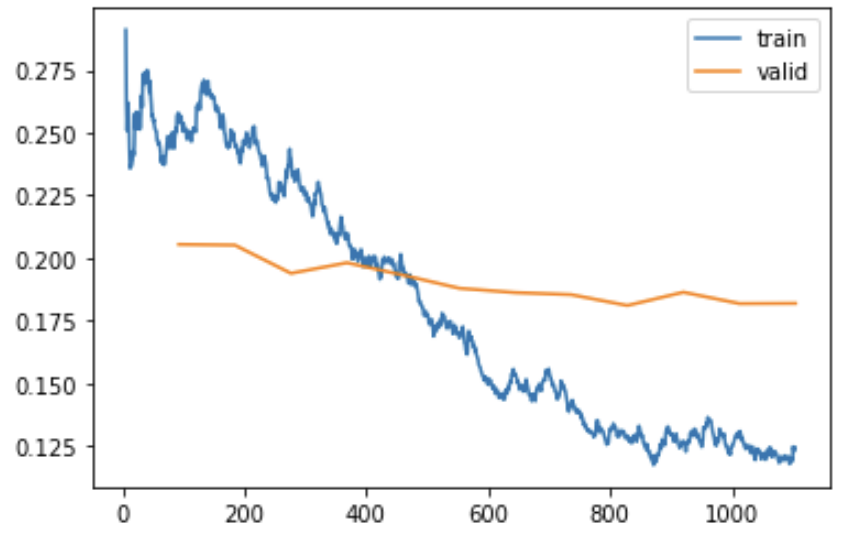

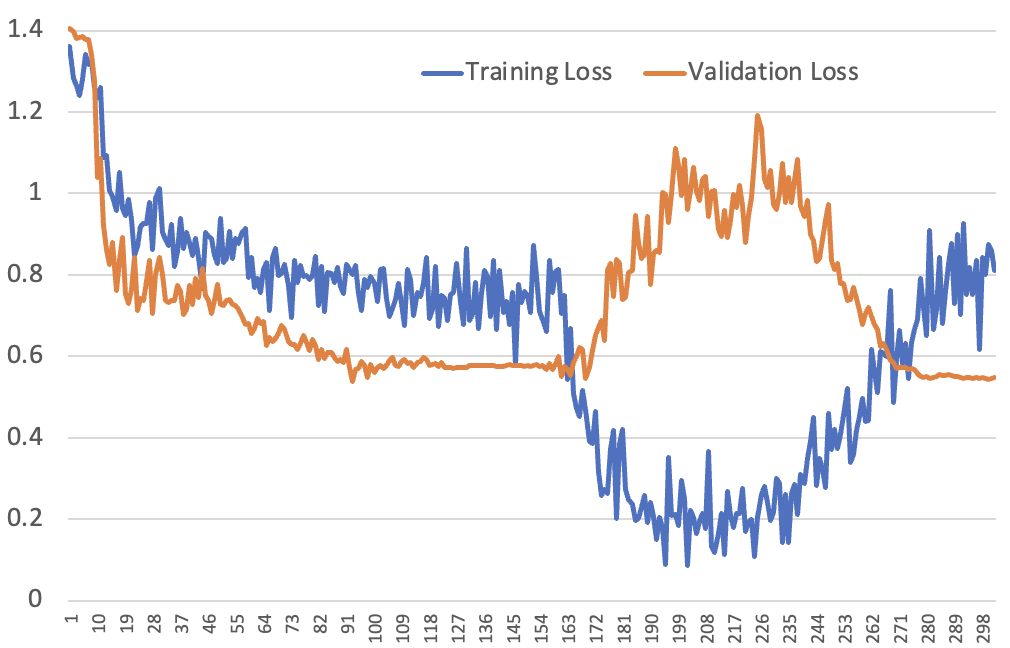

During training the neural network attempts to reduce the loss, which is (roughly speaking) a measure of how often the model is wrong, with highly confident wrong predictions penalised the most, and vice versa. We calculate the loss after each batch for the training set, and from time to time (often at the end of each epoch) we also calculate the loss for a bunch of inputs the model does not get to learn from – this is the “validation set”. Here’s what that looks like in practice when we train for 11 epochs:

As you see, the training loss gradually (and bumpily) improves relatively quickly, slowing down over time, and the validation loss improves more slowly (and would eventually flatten out entirely, and then eventually get worse, if trained for longer).

You can’t see from the chart where epochs start and stop, because it takes many epochs before a model learns what any particular image looks like. This has been a fundamental constraint of neural networks throughout the decades they’ve been developed – they take an awfully long time to learn anything! Why neural nets are so “sample inefficient”, especially compared to how children learn, is actually an area of active research.
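To make the idea of a loss that punishes confident mistakes concrete, here is a small sketch. It assumes the loss is the cross-entropy commonly used for classification (the text above only describes the loss informally), and the three probability lists are invented for illustration.

```python
import math

def cross_entropy(probs, correct_index):
    """Loss for one example: negative log of the probability given to the true label."""
    return -math.log(probs[correct_index])

# Three hypothetical predictions over 5 options; the true answer is index 3.
confident_right = [0.01, 0.01, 0.01, 0.96, 0.01]
unsure          = [0.20, 0.20, 0.20, 0.20, 0.20]
confident_wrong = [0.96, 0.01, 0.01, 0.01, 0.01]

for name, probs in [("confident and right", confident_right),
                    ("unsure", unsure),
                    ("confident and wrong", confident_wrong)]:
    print(f"{name:20s} loss = {cross_entropy(probs, 3):.3f}")
```

The confident wrong guess costs far more than the unsure one, which in turn costs more than the confident correct guess.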

A very odd loss curve

We have recently been working on the Kaggle LLM Science Exam competition, which “challenges participants to answer difficult science-based questions written by a Large Language Model”. For instance, here’s the first question:

Which of the following statements accurately describes the impact of Modified Newtonian Dynamics (MOND) on the observed “missing baryonic mass” discrepancy in galaxy clusters?

- MOND is a theory that reduces the observed missing baryonic mass in galaxy clusters by postulating the existence of a new form of matter called “fuzzy dark matter.”

- MOND is a theory that increases the discrepancy between the observed missing baryonic mass in galaxy clusters and the measured velocity dispersions from a factor of around 10 to a factor of about 20.

- MOND is a theory that explains the missing baryonic mass in galaxy clusters that was previously considered dark matter by demonstrating that the mass is in the form of neutrinos and axions.

- MOND is a theory that reduces the discrepancy between the observed missing baryonic mass in galaxy clusters and the measured velocity dispersions from a factor of around 10 to a factor of about 2.

- MOND is a theory that eliminates the observed missing baryonic mass in galaxy clusters by imposing a new mathematical formulation of gravity that does not require the existence of dark matter.

For those playing along at home, the correct answer, apparently, is D.

Thankfully, we don’t have to rely on our knowledge of Modified Newtonian Dynamics to answer these questions – instead, we are tasked with training a model to answer them. When we submit our model to Kaggle, it will be tested against thousands of “held out” questions that we don’t get to see.

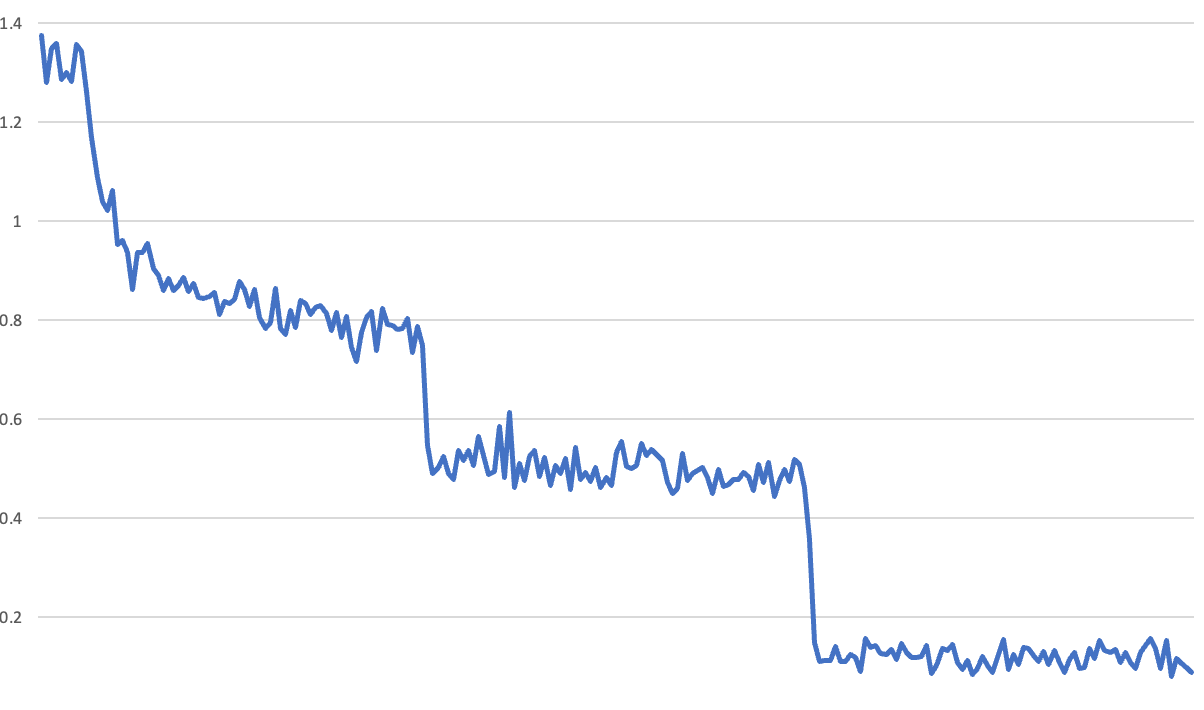

We trained our model for 3 epochs on a big dataset of questions created by our friend Radek Osmulski, and saw the following most unexpected training loss curve:

The problem here is that you can clearly see the end of each epoch – there’s a sudden downwards jump in loss. We’ve seen similar loss curves before, and they’ve always been due to a bug. For instance, it’s easy to accidentally have the model continue to learn when evaluating the validation set – such that after validation the model suddenly appears much better. So we set out to look for the bug in our training process. We were using Hugging Face’s Trainer, so we guessed there must be a bug in that.

While we stepped through the code, we also asked fellow open source developers on the Alignment Lab AI Discord if they had seen similar odd training curves, and pretty much everyone said “yes”. But everyone who responded was using Trainer as well, which seemed to support our theory of a bug in that library.

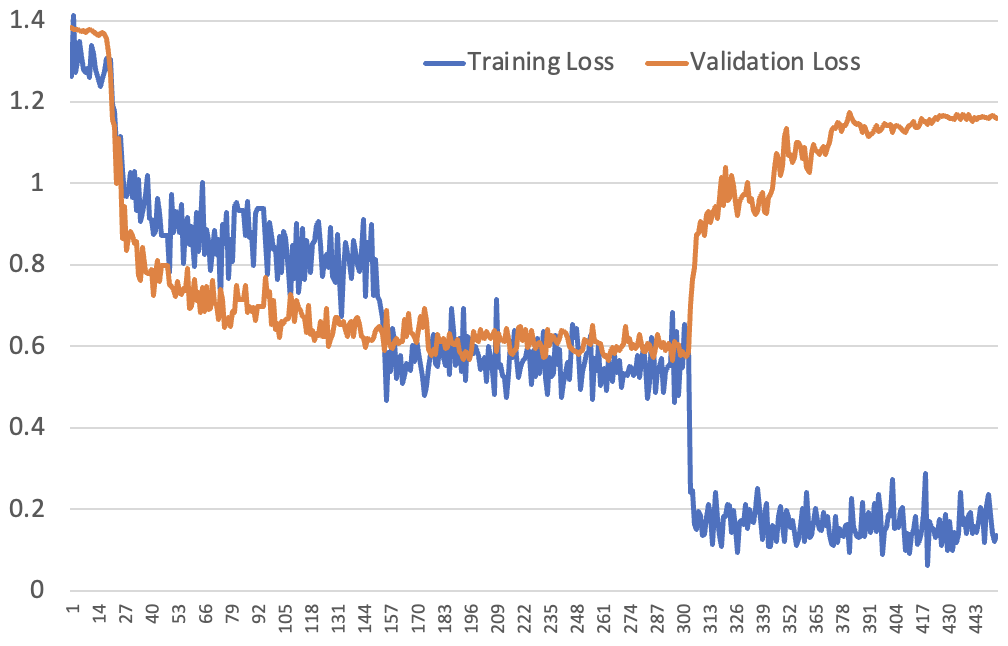

But then @anton on Discord told us he was seeing this curve with his own simple custom training loop:

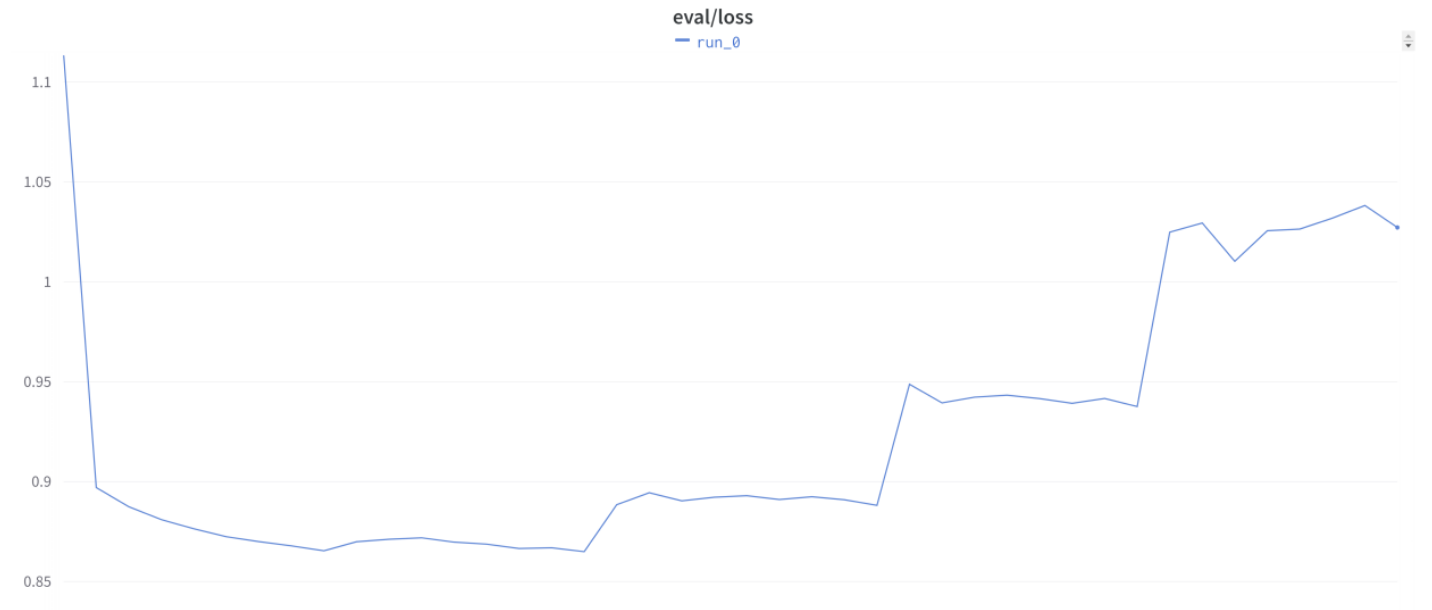

…and he also showed us this accompanying extremely surprising validation loss curve:

Then we started hearing from more and more Discord friends that they had seen similar strange behavior, including when not using Trainer. We wondered if it was some oddity specific to the LoRA approach we were using, but we heard from folks seeing the same pattern when doing full fine-tuning too. In fact, it was basically common knowledge in the LLM fine-tuning community that this is just how things go when you’re doing this kind of work!…

Digging deeper

The hypothesis that we kept hearing from open source colleagues is that these training curves were actually showing overfitting. This seemed, at first, quite impossible. It would imply that the model was learning to recognise inputs from just one or two examples. If you look back at that first curve we showed, you can see the loss diving from 0.8 to 0.5 after the first epoch, and then from 0.5 to under 0.2 after the second. Furthermore, during each of the second and third epochs it wasn’t really learning anything new at all. So, other than its initial learning during the beginning of the first epoch, nearly all the apparent learning was (according to this theory) memorization of the training set occurring with only 3 examples per row! Furthermore, for each question, it only gets a tiny amount of signal: how its guess as to the answer compared to the true label.

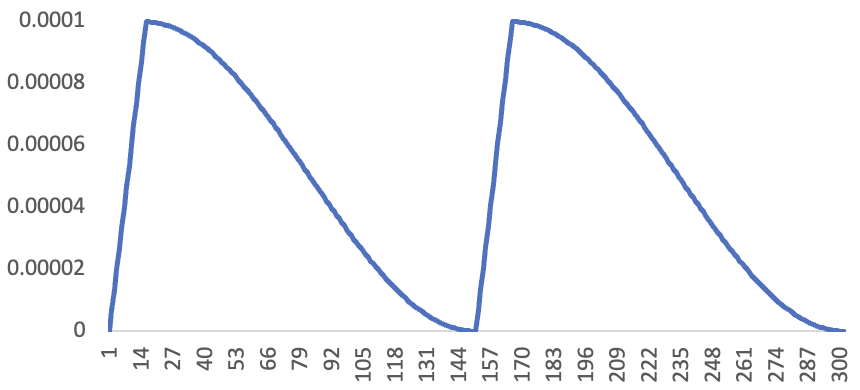

We tried out an experiment – we trained our Kaggle model for two epochs, using the following learning rate schedule:

Nowadays this kind of schedule is not that common, but it’s an approach that saw a lot of success after it was created by Leslie Smith, who discussed it in his 2015 paper Cyclical Learning Rates for Training Neural Networks.

And here are the crazy-looking training and validation loss curves we saw as a result:

The only thing that we have come up with (so far!) that fully explains this picture is that the hypothesis is correct: the model is rapidly learning to recognise examples even after seeing them just once. Let’s work through each part of the loss curve in turn…

Looking at the first epoch, this looks like a very standard loss curve. We have the learning rate warming up over the first 10% of the epoch, and then gradually decreasing following a cosine schedule. Once the LR comes up to temperature, the training and validation loss rapidly decrease, and then they both slow down as the LR decreases and the “quick wins” are captured.
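The schedule described here (warm up over the first 10% of each epoch, then decay along a cosine, restarting every epoch) can be sketched roughly as follows. This is an illustration under assumptions, not the code used in the experiment: the linear warmup shape, the decay to zero, `steps_per_epoch` and `max_lr` are all hypothetical choices.

```python
import math

def warmup_cosine_lr(step, cycle_steps, max_lr, warmup_frac=0.1):
    """Learning rate at `step` when the schedule restarts every `cycle_steps` steps."""
    pos = step % cycle_steps                       # position inside the current cycle
    warmup_steps = max(1, int(cycle_steps * warmup_frac))
    if pos < warmup_steps:
        # linear warmup from 0 towards max_lr (assumed shape)
        return max_lr * pos / warmup_steps
    # cosine decay from max_lr down to 0 over the rest of the cycle
    progress = (pos - warmup_steps) / (cycle_steps - warmup_steps)
    return max_lr * 0.5 * (1.0 + math.cos(math.pi * progress))

steps_per_epoch = 1000  # hypothetical
max_lr = 2e-4           # hypothetical

# Two epochs, one warmup-and-decay cycle per epoch.
schedule = [warmup_cosine_lr(s, steps_per_epoch, max_lr)
            for s in range(2 * steps_per_epoch)]
```

Because the data is visited in the same order each epoch, a batch at a given position always meets the same point of the cycle, so batches seen during warmup or at the tail of the decay get only small updates. Passing the total number of training steps as `cycle_steps` instead would give a single warmup and decay over the whole run.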

The second epoch is where it gets interesting. We’re not re-shuffling the dataset at the start of the epoch, so the first batches of the second epoch are the ones that were seen when the learning rate was still warming up. That’s why we don’t see an immediate step-change like we did from epoch 2 to 3 in the very first loss curve we showed – these batches were only seen when the LR was low, so the model couldn’t learn much from them.

Towards the end of that first 10% of the epoch, the training loss plummets, because the LR was high when these batches were seen during the first epoch, and the model has learned what they look like. The model quickly learns that it can very confidently guess the correct answer.

But during this time, validation loss suffers. That’s because although the model is getting very confident, it’s not actually getting any better at making predictions. It has simply memorised the dataset, but isn’t improving at generalizing. Over-confident predictions cause validation loss to get worse, because the loss function penalizes confident errors more heavily.
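A toy calculation shows how this can happen. The sketch below assumes a cross-entropy loss and uses an invented four-example, two-option validation set. Both sets of predictions pick exactly the same answers, so accuracy is identical, and only the confidence differs.

```python
import math

def mean_loss_and_accuracy(predictions, labels):
    """Average cross-entropy and accuracy over (probabilities, label) pairs."""
    losses = [-math.log(p[y]) for p, y in zip(predictions, labels)]
    hits = [max(range(len(p)), key=p.__getitem__) == y
            for p, y in zip(predictions, labels)]
    return sum(losses) / len(losses), sum(hits) / len(hits)

labels = [0, 1, 0, 1]  # hypothetical validation labels

# Same choices in both cases (3 of 4 right); the last example is wrong.
calibrated    = [[0.70, 0.30], [0.30, 0.70], [0.70, 0.30], [0.70, 0.30]]
overconfident = [[0.99, 0.01], [0.01, 0.99], [0.99, 0.01], [0.99, 0.01]]

loss_cal, acc_cal = mean_loss_and_accuracy(calibrated, labels)
loss_over, acc_over = mean_loss_and_accuracy(overconfident, labels)
print(f"calibrated:    loss={loss_cal:.3f} accuracy={acc_cal:.2f}")
print(f"overconfident: loss={loss_over:.3f} accuracy={acc_over:.2f}")
```

The single confident mistake dominates the average, so the over-confident predictions have the higher loss even though they are no less accurate.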

The end of the curve is where things get particularly interesting. The training loss starts getting worse – and that really never ought to happen! In fact, neither of us remembers ever seeing such a thing before when using a reasonable LR.

But actually, this makes perfect sense under the memorization hypothesis: these are the batches that the model saw at a time when the LR had come back down again, so it wasn’t able to memorize them as effectively. But the model is still over-confident, because it has just got a whole bunch of batches nearly perfectly correct, and hasn’t yet adjusted to the fact that it’s now seeing batches that it didn’t have a chance to learn so well.

It gradually recalibrates to a more reasonable level of confidence, but it takes a while, because the LR is getting lower and lower. As it recalibrates, the validation loss comes back down again.

For our next experiment, we tried 1cycle training over 3 epochs, instead of CLR – that is, we did a single LR warmup for 10% of batches at the start of training, and then decayed the LR over the remaining batches following a cosine schedule. Previously, we did a separate warmup and decay cycle for each epoch. Also, we increased the LoRA rank, resulting in slower learning. Here’s the resulting loss curve:

The shape largely follows what we’d expect, based on the previous discussion, except for one thing: the validation loss doesn’t jump up at epoch 2 – it’s not until epoch 3 that we see that jump. However, previously the training loss was around 0.2 by the 2nd epoch, which is only possible when the model is making highly confident predictions. In the 1cycle example it doesn’t make such confident predictions until the third epoch, and we don’t see the jump in validation loss until that happens.

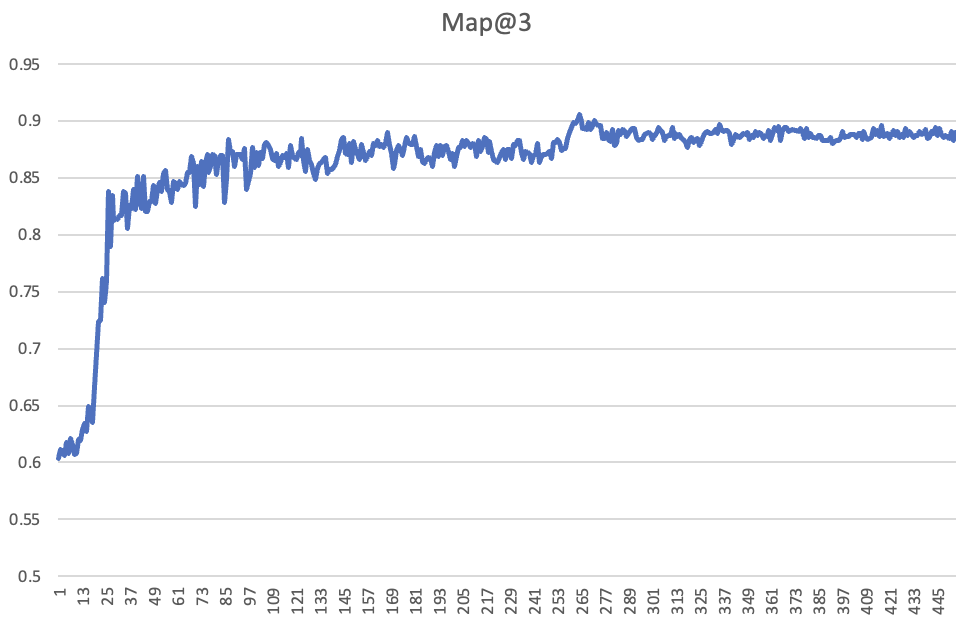

It’s important to note that the validation loss getting worse doesn’t mean that we’re over-fitting in practice. What we generally care about is accuracy, and it’s fine if the model is over-confident. In the Kaggle competition the metric used for the leaderboard is Mean Average Precision @ 3 (MAP@3), which is the accuracy of the ranked top-3 multiple-choice predictions made by the model. Here’s the validation accuracy per batch of the 1cycle training run shown in the previous chart – as you see, it keeps improving, even though the validation loss got worse in the last epoch:

If you’re interested in diving deeper, take a look at this report where Johno shares logs from some additional examples, along with a notebook for those who’d like to see this effect in action for themselves.
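For readers unfamiliar with the MAP@3 metric mentioned above, here is a minimal sketch of how it can be computed when each question has exactly one correct answer: a question scores 1, 1/2 or 1/3 if the correct answer is ranked first, second or third, and 0 otherwise. The function name and the sample predictions are illustrative, not taken from the competition code.

```python
def map_at_3(ranked_predictions, correct_answers):
    """Mean Average Precision @ 3 with a single correct answer per question."""
    total = 0.0
    for ranked, correct in zip(ranked_predictions, correct_answers):
        for rank, guess in enumerate(ranked[:3], start=1):
            if guess == correct:
                total += 1.0 / rank  # 1, 1/2 or 1/3 depending on position
                break
    return total / len(correct_answers)

# Hypothetical ranked guesses for four questions, best guess first.
preds = [["D", "A", "B"], ["A", "C", "E"], ["B", "E", "C"], ["A", "B", "C"]]
truth = ["D", "C", "C", "E"]

score = map_at_3(preds, truth)
print(f"MAP@3 = {score:.4f}")
```

The metric depends only on the ranking of the options, not on how much probability the model assigns to each, which is why an over-confident model is not penalised by it.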

How could the memorization hypothesis be true?

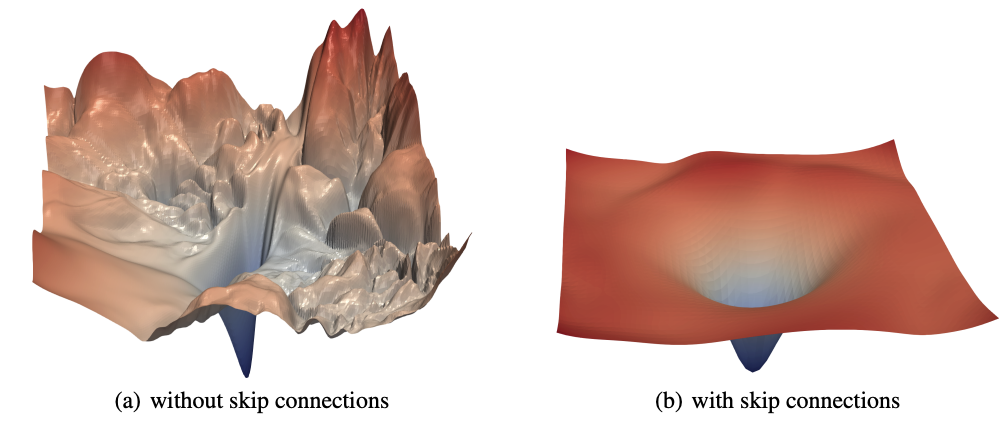

There is no fundamental law that says that neural networks can’t learn to recognise inputs from a single example. It’s just what researchers and practitioners have generally found to be the case in practice. It takes a lot of examples because the loss surfaces that we’re trying to navigate using stochastic gradient descent (SGD) are too bumpy to be able to jump far at once. We do know, however, that some things can make loss surfaces smoother, such as using residual connections, as shown in the classic Visualizing the Loss Landscape of Neural Nets paper (Li et al, 2018).

It could well be the case that pre-trained large language models have extremely smooth loss surfaces in areas close to the minimal loss, and that a lot of the fine-tuning work done in the open source community is in this area. This is based on the underlying premise surrounding the original development of fine-tuned universal language models. These models were first documented in the ULMFiT paper back in 2018 by one of us (Jeremy) and Sebastian Ruder. The reason Jeremy originally built the ULMFiT algorithm is that it seemed necessary that any model that could do a good job of language modeling (that is, predicting the next word of a sentence) would have to build a rich hierarchy of abstractions and capabilities internally. Furthermore, Jeremy believed that this hierarchy could then be easily adapted to solve other tasks requiring similar capabilities using a small amount of fine-tuning. The ULMFiT paper demonstrated for the first time that this is indeed exactly what happens.

Large language models, which today are orders of magnitude bigger than those studied in ULMFiT, must have an even richer hierarchy of abstractions. So fine-tuning one of these models to, for instance, answer multiple-choice questions about science, can largely harness capabilities and knowledge that are already available in the model. It’s just a case of surfacing the right pieces in the right way. This should not require many weights to be adjusted very much.

Based on this, it’s perhaps not surprising to think that a pre-trained language model with a small random classification head could be in a part of the weight space where the loss surface smoothly and clearly points exactly in the direction of a good weight configuration. And when using the Adam optimiser (as we did), having a consistent and smooth gradient results in the effective dynamic learning rate going up and up, such that steps can get very big.
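The point about Adam can be illustrated with a toy calculation. The sketch below applies the standard Adam moment estimates to a single scalar parameter and compares a gradient that always points the same way with one that flips sign at every step. It is a simplified illustration with made-up gradient values, not a reproduction of the training setup used here.

```python
import math

def adam_step_ratios(grads, beta1=0.9, beta2=0.999, eps=1e-8):
    """Return the bias-corrected m_hat / (sqrt(v_hat) + eps) for each step.

    Adam multiplies this ratio by the learning rate to get the actual update.
    """
    m = v = 0.0
    ratios = []
    for t, g in enumerate(grads, start=1):
        m = beta1 * m + (1 - beta1) * g        # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g    # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)
        v_hat = v / (1 - beta2 ** t)
        ratios.append(m_hat / (math.sqrt(v_hat) + eps))
    return ratios

# Small gradients that always point the same way.
consistent = adam_step_ratios([0.01] * 50)
# Gradients of the same size whose sign flips on every step.
noisy = adam_step_ratios([0.01 if t % 2 == 0 else -0.01 for t in range(50)])

print(f"consistent: {consistent[-1]:.3f}")
print(f"noisy:      {noisy[-1]:.3f}")
```

With a consistent gradient the ratio stays close to 1 even though the raw gradient is tiny, so each update is close to the full learning rate. With a sign-flipping gradient the ratio shrinks to a small fraction of that.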

What now?

Having a model that learns really fast sounds great – but actually it means that a lot of basic ideas around how to train models may be turned on their head! When models train very slowly, we can train them for a long time, using a wide variety of data, for multiple epochs, and we can expect that our model will gradually pull out generalisable information from the data we give it.

But when models learn this fast, the catastrophic forgetting problem may suddenly become far more pronounced. For instance, if a model sees ten examples of a very common relationship, and then one example of a less common counter-example, it may well remember the counter-example instead of just slightly downweighting its memory of the original ten examples.

It may also be the case that data augmentation is now less useful for avoiding over-fitting. Since LLMs are so effective at pulling out representations of the information they’re given, mixing things up by paraphrasing and back-translation may no longer make much of a difference. The model would effectively be getting the same information either way.

Perhaps we can mitigate these challenges by greatly increasing our use of techniques such as dropout (which is already used a little in fine-tuning techniques such as LoRA) or stochastic depth (which does not seem to have been used in NLP to any significant extent yet).

Alternatively, maybe we just need to be careful to use rich mixtures of datasets throughout training, so that our models never have a chance to forget. Although Code Llama, for instance, did suffer from catastrophic forgetting (as it got better at code, it got much worse at everything else), it was fine-tuned with only 10% of non-code data. Perhaps with something closer to a 50/50 mix it would have been possible to get just as good at coding, without losing its existing capabilities.

If you come up with any alternative hypotheses, and are able to test them, or if you find any empirical evidence that the memorization hypothesis is wrong, please do let us know! We’re also keen to hear about other work in this area (and apologies if we failed to reference any prior work here), and any ideas about how (if at all) we should adjust how we train and use these models based on these observations. We’ll be keeping an eye on replies to this Twitter thread, so please respond there if you have any thoughts or questions.
