AMD is pushing advanced packaging and chiplets to the limit with the launch of its Instinct MI300-series accelerators, part of a quest to close the gap with rival Nvidia in the AI arena and bolster its standing in the high performance computing (HPC) community.

When the generative AI hype train left the station in late 2022 amid the launch of ChatGPT, AMD lacked a competitive answer. Its MI250X, which powers the number-one-ranked Frontier supercomputer, was stellar at crunching highly precise FP64 math. But it came up short next to Nvidia’s H100 in the lower-precision workloads common in AI training and inference.

The MI250X was capable of hitting just shy of 100 teraFLOPS at FP64 but could only manage 383 teraFLOPS of FP16 or BF16, putting it just ahead of Nvidia’s A100, if you ignore sparsity of course. Next to the H100, however, the MI250X was outclassed. At FP8, Nvidia’s flagship could top 1,979 teraFLOPS in its SXM form factor, and with sparsity it’s closer to 4 petaFLOPS of performance.

With the launch of the Instinct MI300A APUs and MI300X GPUs this week, AMD aims to rectify this performance deficit with modular accelerators tuned for HPC, AI training, and inference. And going off AMD’s performance claims, it looks like it has done just that.

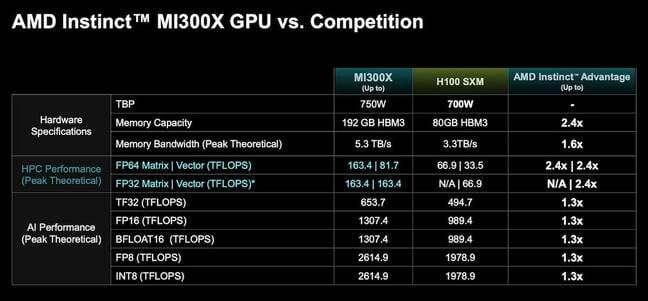

AMD says its MI300X GPUs not only match but exceed the speed of Nvidia’s H100, with FP8 performance of 2.6 petaFLOPS. We’re told the MI300-series parts follow a standard 2:1 sparsity advantage, the same we see from Nvidia.

AMD claims the Instinct MI300X will deliver up to 32 percent higher performance in AI workloads than the Nvidia H100.

In terms of raw FLOPS, that makes the MI300X 32 percent faster than Nvidia’s H100. However, the MI300-series part’s biggest advantage still comes down to memory.
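The 32 percent figure is easy to check. Here is a quick sketch using dense (non-sparse) FP8 peaks. The 2,614.9 teraFLOPS value is AMD's published MI300X spec, which the article rounds to 2.6 petaFLOPS. With the rounded number the lead works out to roughly 31 percent instead. Because both vendors claim the same 2:1 sparsity uplift, switching sparsity on doubles both sides and leaves the ratio unchanged.

```python
# Peak FP8 throughput in teraFLOPS, dense (no sparsity).
MI300X_FP8_DENSE_TF = 2614.9   # AMD's published figure; article rounds to 2.6 PF
H100_SXM_FP8_DENSE_TF = 1979.0

# Both vendors quote a 2:1 uplift when structured sparsity is used.
SPARSITY_FACTOR = 2


def speedup_pct(a: float, b: float) -> float:
    """Percentage by which throughput `a` exceeds throughput `b`."""
    return (a / b - 1.0) * 100.0


dense = speedup_pct(MI300X_FP8_DENSE_TF, H100_SXM_FP8_DENSE_TF)
sparse = speedup_pct(MI300X_FP8_DENSE_TF * SPARSITY_FACTOR,
                     H100_SXM_FP8_DENSE_TF * SPARSITY_FACTOR)

print(f"dense FP8 lead:  {dense:.1f}%")
print(f"sparse FP8 lead: {sparse:.1f}%")
print(f"H100 FP8 with sparsity: {H100_SXM_FP8_DENSE_TF * SPARSITY_FACTOR:.0f} TF")
```

The last line shows where "closer to 4 petaFLOPS" comes from: 1,979 doubled is 3,958 teraFLOPS.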

The MI300X not only has more of it, but it’s also faster, coming in at 5.2TB/s of bandwidth compared to 3.35TB/s for the H100. Of course, that lead diminishes considerably when you consider the recently announced H200 will reportedly have 141GB of HBM3e at 4.8TB/s of bandwidth, or 4.9TB/s in the case of Nvidia’s Grace-Hopper Superchip. Still, AMD holds the lead here.
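To put the bandwidth figures above on a common scale, the sketch below expresses the MI300X's lead over each rival as a percentage. It uses only the peak numbers quoted in this article. Sustained bandwidth in real workloads will be lower on every part.

```python
# Peak HBM bandwidth in TB/s, as quoted in the article.
BANDWIDTH_TBPS = {
    "MI300X": 5.2,
    "H100": 3.35,
    "H200": 4.8,
    "GH200 (HBM3e)": 4.9,
}


def lead_pct(rival: str, ours: str = "MI300X") -> float:
    """How much more peak bandwidth `ours` has than `rival`, in percent."""
    return (BANDWIDTH_TBPS[ours] / BANDWIDTH_TBPS[rival] - 1.0) * 100.0


for rival in ("H100", "H200", "GH200 (HBM3e)"):
    print(f"MI300X vs {rival}: {lead_pct(rival):+.0f}%")
```

That works out to roughly +55 percent over the H100, but only about +8 and +6 percent over the HBM3e-equipped H200 and GH200.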

This translates into a substantial performance lead when it comes to AI inferencing, according to AMD, with the MI300X claiming a 1.6x lead over the H100 in token throughput on the 176-billion-parameter Bloom large language model and a 1.4x lead in latency on Meta’s Llama 2 70B model. When it comes to AI training, meanwhile, AMD says the MI300X will be “competitive,” with performance on par with Nvidia’s H100.
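Why would memory translate into an inference advantage? A common rule of thumb helps here, and this is not how AMD produced its figures. At batch size 1, generating each token means streaming every model weight out of HBM once. That makes capacity decide whether a model fits on one card, and bandwidth cap the token rate. The sketch assumes FP16 weights (2 bytes per parameter) and ignores KV-cache traffic, activations, and kernel efficiency. The 80GB H100 capacity is our figure, not the article's.

```python
# Back-of-envelope, memory-bound model of batch-1 LLM decoding.
# Assumption: every weight is read from HBM once per generated token.

def weight_bytes(params: float, bytes_per_param: float) -> float:
    return params * bytes_per_param


def fits(params: float, bytes_per_param: float, hbm_bytes: float) -> bool:
    """True if the weights alone fit in a single accelerator's HBM."""
    return weight_bytes(params, bytes_per_param) <= hbm_bytes


def max_tokens_per_s(params: float, bytes_per_param: float,
                     bw_bytes_per_s: float) -> float:
    """Bandwidth-bound ceiling on tokens/s at batch size 1."""
    return bw_bytes_per_s / weight_bytes(params, bytes_per_param)


LLAMA2_70B_PARAMS = 70e9
FP16_BYTES = 2

# (name, HBM capacity in GB, peak bandwidth in TB/s)
for name, hbm_gb, bw_tbps in [("MI300X", 192, 5.2), ("H100", 80, 3.35)]:
    ok = fits(LLAMA2_70B_PARAMS, FP16_BYTES, hbm_gb * 1e9)
    tps = max_tokens_per_s(LLAMA2_70B_PARAMS, FP16_BYTES, bw_tbps * 1e12)
    # For the H100 the ceiling is moot on one card: 140GB of weights won't fit.
    print(f"{name}: fits on one card={ok}, ceiling ~{tps:.0f} tokens/s")
```

Under these crude assumptions, the MI300X can hold a 70B FP16 model on a single card. The H100 has to split the model across several GPUs.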

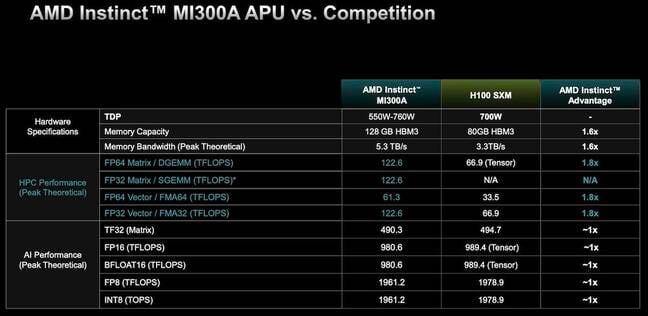

AMD’s Instinct MI300A promises up to 1.8x higher performance than the Nvidia H100 in HPC workloads

For HPC workloads, meanwhile, the MI300A’s combination of Zen 4 CPU cores and CDNA 3 graphics cores is rated for 122.6 teraFLOPS of double-precision performance, 1.8x that of the H100. Beyond raw FLOPS, AMD suggests that, thanks to its coherent memory architecture, customers could see as much as a four-fold performance improvement over the H100 in benchmarks like OpenFOAM Motorbike.
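A quick sanity check on the 1.8x FP64 claim is to work backwards to the H100 baseline it implies. We can then compare that baseline with Nvidia's published FP64 Tensor Core peak for the H100 SXM. The 67 teraFLOPS value is our addition, not a number from the article.

```python
MI300A_FP64_TF = 122.6           # AMD's rated FP64 peak, per the article
CLAIMED_RATIO = 1.8              # AMD's "1.8x the H100" claim
H100_SXM_FP64_TENSOR_TF = 67.0   # Nvidia's published FP64 Tensor Core peak

implied_h100_tf = MI300A_FP64_TF / CLAIMED_RATIO
actual_ratio = MI300A_FP64_TF / H100_SXM_FP64_TENSOR_TF

print(f"H100 baseline implied by AMD's claim: {implied_h100_tf:.1f} TF")
print(f"ratio vs published H100 FP64 Tensor peak: {actual_ratio:.2f}x")
```

The two line up, which suggests AMD compared against the H100's tensor-core FP64 peak. The gap between roughly 1.8x on paper and the claimed 4x in OpenFOAM is what AMD attributes to the unified memory, not to raw FLOPS.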

As with all vendor-supplied numbers, we recommend taking AMD’s claims with a grain of salt. That said, how AMD managed to squeeze all that performance out of the chip is just as important, and arguably the more interesting side of the launch.

AMD brings Epyc modularity to Instinct

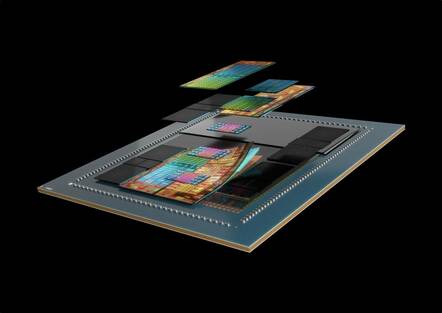

AMD’s MI300-series parts are unlike anything we’ve seen from the chip biz before, both in terms of packaging and architecture.

Considering the ongoing hype around LLMs and generative AI, we’ll kick things off with AMD’s new GPU for AI training and inference, the MI300X, since it’s the simpler of the two.

Compared to its predecessor, the MI250X, the MI300X is a far more complex bit of kit, sticking together 12 dies (four I/O dies and eight GPU dies) into something of a silicon sandwich. At the heart of the chip are those eight XCDs, which is what AMD calls its GPU dies.

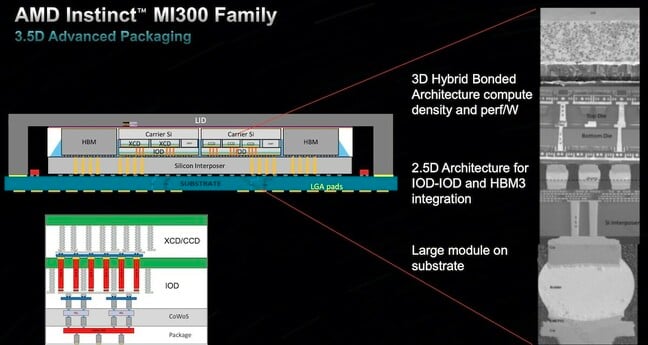

The MI300A meshes 24 Zen 4 cores, six CDNA 3 GPU dies, and 128GB of HBM3 memory onto a single package aimed at HPC workloads

Each of these chiplets features 40 CDNA 3 compute units backed by 4MB of shared L2 cache, though only 38 of those compute units are actually enabled. In total, the MI300X packs 304 CDNA 3 compute units along with 192GB of HBM3 memory into a single package.
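The MI300X compute totals follow directly from the per-XCD figures above. The sketch below simply multiplies them out. Disabling a couple of compute units per die is a common way to improve yields, but the article doesn't say why AMD does it, so treat that reason as our assumption.

```python
XCDS = 8                    # GPU chiplets on the MI300X
CUS_PER_XCD_PHYSICAL = 40
CUS_PER_XCD_ENABLED = 38
L2_MB_PER_XCD = 4           # shared L2 on each XCD

enabled_cus = XCDS * CUS_PER_XCD_ENABLED
physical_cus = XCDS * CUS_PER_XCD_PHYSICAL
disabled_cus = physical_cus - enabled_cus
total_l2_mb = XCDS * L2_MB_PER_XCD

print(f"enabled CUs: {enabled_cus}, physical: {physical_cus}, disabled: {disabled_cus}")
print(f"L2 across all XCDs: {total_l2_mb} MB")
```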

This vertical integration is possible thanks to a number of advanced 2.5D and 3.5D packaging techniques, like TSMC’s chip-on-wafer-on-substrate (CoWoS). The chiplet approach itself is nothing new for AMD. The company was arguably responsible for popularizing it with its Epyc CPUs, which stitch multiple smaller, higher-yielding dies into a single logical unit.

Starting from the top and working our way down, two GPU chiplets (XCDs) are stacked atop each of the four I/O dies using a technique similar to the one AMD’s X3D chips use to attach additional SRAM. The I/O dies are then bonded to a silicon interposer, which is connected to the organic substrate using TSMC’s CoWoS.

Just like on Epyc, memory is attached to the MI300’s I/O dies rather than to the compute dies themselves. But instead of the typical DDR5 memory controllers going out to a few DIMMs on the motherboard, each HBM3 stack is attached using TSMC’s 2.5D packaging tech for maximum bandwidth.

AMD Instinct MI300-series accelerators use a combination of 2.5D and 3.5D packaging to create a dense compute sandwich.

AMD’s APU, the MI300A, takes this formula to a new extreme, swapping out two of the CDNA 3 GPU chiplets in favor of a trio of CPU dies totaling 24 Zen 4 cores, and carrying 128GB of HBM3 memory.
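The MI300X and MI300A can be modeled as the same four-quadrant package, where each I/O die carries either GPU or CPU chiplets. The sketch below is a toy model under several assumptions of ours that the article doesn't state. We assume the three CCDs occupy one quadrant in place of its two XCDs, and that each Zen 4 CCD has 8 cores. We also assume HBM3 stacks of 24GB on the MI300X and 16GB on the MI300A, with two stacks per I/O die.

```python
from dataclasses import dataclass


@dataclass
class Quadrant:
    """One I/O die plus whatever compute chiplets are stacked on it."""
    xcds: int = 0          # CDNA 3 GPU chiplets
    ccds: int = 0          # Zen 4 CPU chiplets
    hbm_stacks: int = 2    # HBM3 stacks wired to this I/O die


@dataclass
class Package:
    name: str
    quadrants: list[Quadrant]
    gb_per_hbm_stack: int  # assumption: 24GB (MI300X) or 16GB (MI300A)

    def compute_units(self, cus_per_xcd: int = 38) -> int:
        return sum(q.xcds for q in self.quadrants) * cus_per_xcd

    def zen4_cores(self, cores_per_ccd: int = 8) -> int:
        return sum(q.ccds for q in self.quadrants) * cores_per_ccd

    def hbm_gb(self) -> int:
        return sum(q.hbm_stacks for q in self.quadrants) * self.gb_per_hbm_stack


mi300x = Package("MI300X", [Quadrant(xcds=2) for _ in range(4)], gb_per_hbm_stack=24)
# MI300A: one quadrant's two XCDs swapped for three CCDs (our assumed layout).
mi300a = Package("MI300A",
                 [Quadrant(xcds=2) for _ in range(3)] + [Quadrant(ccds=3)],
                 gb_per_hbm_stack=16)

for p in (mi300x, mi300a):
    print(f"{p.name}: {p.compute_units()} CUs, {p.zen4_cores()} Zen 4 cores, "
          f"{p.hbm_gb()}GB HBM3")
```

Swapping two XCDs for three CCDs costs the MI300A 76 compute units, leaving 228, and buys it 24 CPU cores.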

And if you’re wondering, these are the same CCDs found in AMD’s fourth-gen Epyc chips launched late last autumn. Early renderings of the MI300A had left us puzzled as to whether AMD was using a custom CPU chiplet.

APUs, AMD’s long-standing name for chips featuring both CPU and GPU cores, have been a key component of AMD’s desktop and mobile processor families for years. The MI300A marks the first time AMD has applied this approach to a datacenter chip, and unlike most APUs we’ve seen from the House of Zen to date, this one isn’t monolithic and is aimed squarely at the HPC market.

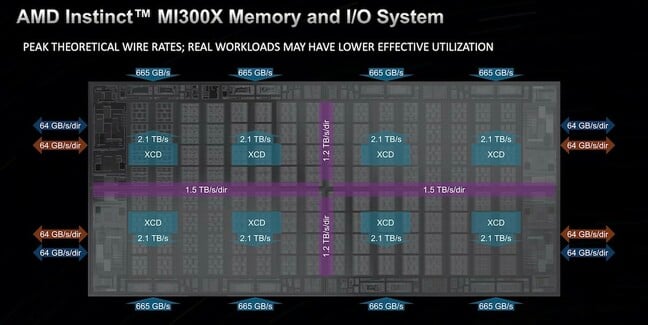

The benefit of this compute and I/O sandwich is extremely high-performance communication between the dies and memory. As AMD’s chart illustrates, two HBM3 stacks deliver 1.33TB/s of bandwidth to each of the four IODs, which can communicate with each other at between 1.2TB/s and 1.5TB/s in each direction. This allows each IOD to feed up to two GPU tiles at 2.1TB/s each.

AMD is pushing some serious bandwidth between the MI300’s chiplets, measured in terabytes per second.
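The per-IOD figures from AMD's chart can be added up to see how they fit together. The sketch below uses only the numbers quoted above. Four IODs at 1.33TB/s each land close to the 5.2TB/s headline bandwidth figure. Each IOD's links to its two GPU tiles also carry more than its local HBM supplies, presumably leaving room for traffic from the other quadrants. That last point is our reading, not AMD's.

```python
# Figures from AMD's chart as quoted in the article (TB/s).
IODS = 4
HBM_STACKS_PER_IOD = 2
HBM_TBPS_PER_IOD = 1.33          # delivered by the two local HBM3 stacks
XCDS_PER_IOD = 2
IOD_TO_XCD_TBPS = 2.1            # per GPU tile
IOD_TO_IOD_TBPS = (1.2, 1.5)     # per direction, low and high end of the range

aggregate_hbm_tbps = IODS * HBM_TBPS_PER_IOD
per_stack_tbps = HBM_TBPS_PER_IOD / HBM_STACKS_PER_IOD
xcd_facing_tbps_per_iod = XCDS_PER_IOD * IOD_TO_XCD_TBPS
lo, hi = IOD_TO_IOD_TBPS

print(f"aggregate HBM bandwidth: {aggregate_hbm_tbps:.2f} TB/s")
print(f"per HBM3 stack: {per_stack_tbps:.3f} TB/s")
print(f"IOD -> XCD bandwidth per IOD: {xcd_facing_tbps_per_iod:.1f} TB/s")
print(f"IOD <-> IOD: {lo}-{hi} TB/s each way")
print(f"local HBM as share of XCD-facing bandwidth: "
      f"{HBM_TBPS_PER_IOD / xcd_facing_tbps_per_iod:.0%}")
```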

In fact, the biggest bottleneck in the entire architecture is data going off package. The four I/O dies provide a total of 1TB/s of bandwidth (512GB/s in either direction) for communication between accelerators, hosts, or peripherals, depending on the configuration. According to AMD, this allows its MI300A APU to support four-socket configurations, while the MI300X GPU will support carrier boards with up to eight sockets.
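The 1TB/s figure is simply both directions added together. The split below is our assumption, based on our reading of AMD's published specs rather than on this article. We assume the bandwidth is spread across eight x16 links, with seven used for connections to peer GPUs and one for the host. Under that split, the peer links add up to the 896GB/s quoted for MI300-series off-package traffic. Seven links is also exactly what each socket needs to reach every other socket on a fully connected eight-socket board.

```python
TOTAL_PER_DIRECTION_GBPS = 512   # from the article
X16_LINKS = 8                    # assumption: eight x16 links in total
PEER_LINKS = 7                   # assumption: seven for GPU peers, one for the host

bidirectional_gbps = 2 * TOTAL_PER_DIRECTION_GBPS
per_link_gbps = bidirectional_gbps / X16_LINKS
peer_gbps = PEER_LINKS * per_link_gbps


def links_for_full_mesh(n_sockets: int) -> int:
    """Direct links each socket needs to reach every other socket."""
    return n_sockets - 1


print(f"total: {bidirectional_gbps} GB/s, per link: {per_link_gbps:.0f} GB/s")
print(f"peer-to-peer: {peer_gbps:.0f} GB/s")
for sockets in (4, 8):
    print(f"{sockets}-socket full mesh needs {links_for_full_mesh(sockets)} links per socket")
```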

Cramming all of that performance into such a dense package does come at the cost of higher power consumption, but it’s not nearly as bad as we’d feared. Early disclosures suggested the chips might consume as much as 850W. Instead, the MI300X is rated for 750W, about 150W more than its predecessor but just 50W more than the H100. The MI300A, meanwhile, appears to have a variable TDP ranging from 550W to 760W.
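Combining the power ratings above with the peak FP8 figures quoted earlier gives a rough sense of efficiency. The sketch below treats rated power and peak throughput as the only inputs, so it is a paper comparison, not a measurement. Real performance per watt depends on sustained clocks and workload. The H100's 700W is inferred from the article's "50W more than the H100".

```python
MI300X_FP8_TF = 2600.0           # rounded dense FP8 peak from the article
MI300X_WATTS = 750
H100_FP8_TF = 1979.0             # dense FP8 peak, SXM
H100_WATTS = MI300X_WATTS - 50   # article: MI300X is 50W above the H100


def tf_per_watt(tf: float, watts: float) -> float:
    """Peak teraFLOPS per rated watt."""
    return tf / watts


mi = tf_per_watt(MI300X_FP8_TF, MI300X_WATTS)
h = tf_per_watt(H100_FP8_TF, H100_WATTS)
print(f"MI300X: {mi:.2f} TF/W, H100: {h:.2f} TF/W, ratio {mi / h:.2f}x")
```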

The competitive landscape

AMD is hardly the first to talk about building datacenter APUs. Intel’s Falcon Shores was originally envisioned as an XPU (because of course Chipzilla can’t just call it an APU) with a flexible arrangement of CPU and GPU cores and tiles.

However, with the cancellation of Ponte Vecchio’s successor, Rialto Bridge, Intel abandoned the concept, with Jeff McVeigh, VP of Intel’s supercomputing group, arguing at the time that the “push to integrate a CPU and GPU into an XPU was premature.” Falcon Shores has since been reimagined as a standard GPU integrating IP from Intel’s Habana AI division and is due out in 2025.

Then there’s Nvidia’s Grace-Hopper Superchip, which pairs a 72-core Arm Neoverse V2 processor with an H100 GPU die, 512GB of LPDDR5x memory, and either 96GB of HBM3 or 144GB of HBM3e.

While it might be tempting to draw comparisons between that chip, now called the GH200, and AMD’s MI300A, there are a couple of notable differences worth pointing out.

The way the two chips’ CPUs and GPUs are interconnected is very different. Rather than the silicon-to-silicon die stacking we see on the MI300-series parts, Nvidia’s GH200 uses its 900GB/s NVLink-C2C interconnect to bridge the two chips. While NVLink-C2C is fast, it’s not even close to the terabytes per second of bandwidth AMD is pushing between the dies on MI300. That said, for off-package communications, NVLink is still a hair faster at 900GB/s versus the 896GB/s enabled by the MI300-series parts.

Another key difference relates to memory domains. Nvidia’s GH200 uses DRAM for the CPU and HBM for the GPU. While this offers a capacity advantage, data still needs to be copied from one to the other before it can be processed. The MI300A, on the other hand, shares its memory seamlessly between the CPU and GPU. Both processors can read, write, and operate on the same pool of HBM, eliminating the need for costly copy operations.
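A toy model gives a feel for what "costly copy operations" can mean. Suppose a working set has to travel from CPU memory to the GPU and back across NVLink-C2C each time it is processed. Several assumptions here are ours. We treat the 900GB/s figure as total bidirectional bandwidth, or 450GB/s each way, and we assume transfers run at full rate, one after the other. The 45GB working set is hypothetical. NVLink-C2C is also cache-coherent, so real GH200 code doesn't necessarily copy everything, and this should be read as the worst case the paragraph above describes. On the MI300A the equivalent cost is zero, because both processors address the same HBM.

```python
NVLINK_C2C_PER_DIR_GBPS = 450.0   # assumption: 900GB/s total, split evenly


def copy_seconds(buffer_gb: float, round_trips: int = 1,
                 per_dir_gbps: float = NVLINK_C2C_PER_DIR_GBPS) -> float:
    """Time to move a buffer CPU -> GPU and back, `round_trips` times.

    Assumes full link rate and no overlap between the two directions.
    """
    return 2 * round_trips * buffer_gb / per_dir_gbps


WORKING_SET_GB = 45.0   # hypothetical working set, for illustration only

for trips in (1, 10, 100):
    print(f"{trips:>3} round trips of {WORKING_SET_GB:.0f}GB: "
          f"{copy_seconds(WORKING_SET_GB, trips):.1f} s spent copying")
```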

The cost of this approach, as we’ve already explored, is complexity. MI300 is indisputably a more complicated chip, at least in terms of packaging. Nvidia’s GH200 is simpler, but potentially less efficient when it comes to data movement. Which approach is inherently better isn’t clear, and will likely depend on the workload the hardware is used for.

Looking at the broader GPU and AI accelerator market, the MI300X will have to compete with Nvidia’s recently announced H200 GPU, which offers higher memory bandwidth than the H100 thanks to the shift to faster HBM3e. According to Nvidia’s roadmap, that chip will serve as a stopgap until Nvidia’s next-generation B100 arrives in 2024.

The MI300X will also compete with Intel’s long-awaited Gaudi 3 AI accelerator in 2024. While details are thin, Intel claims it will deliver a 4x improvement in brain float 16 (BF16) performance and a 50 percent increase in memory capacity and bandwidth.

Availability and deployment

When it comes to availability, both the APU and GPU versions of the MI300 are already making their way out to customers. Some of the earliest adopters are Microsoft, Oracle, and the DoE’s Lawrence Livermore National Laboratory.

Last month, Microsoft Azure announced an MI300X-powered VM. These instances boast eight accelerators totaling 1.5TB of HBM3 memory, backed by 400Gb/s InfiniBand networking to each of the cards.
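The Azure VM totals follow from the per-card figures. Eight cards with 192GB each give the 1.5TB quoted. The sketch also sums the per-card networking. Note the unit change: 400 gigabits per second per card becomes 3.2 terabits, or 400 gigabytes, per second for the whole VM. That is still well below the roughly 1TB/s each card has for on-board links to its peers.

```python
GPUS_PER_VM = 8
HBM_PER_GPU_GB = 192        # MI300X HBM3 capacity
NIC_PER_GPU_GBITS = 400     # InfiniBand per card, in gigabits per second

hbm_total_gb = GPUS_PER_VM * HBM_PER_GPU_GB
net_total_gbits = GPUS_PER_VM * NIC_PER_GPU_GBITS
net_total_gbytes = net_total_gbits / 8   # bits -> bytes

print(f"HBM per VM: {hbm_total_gb} GB (~{hbm_total_gb / 1024:.1f} TB)")
print(f"network per VM: {net_total_gbits} Gb/s = {net_total_gbytes:.0f} GB/s")
```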

Oracle will also make AMD’s MI300X available to rent alongside its massive cluster of Nvidia A100 and H100 accelerators.

On the HPC side, work on Lawrence Livermore National Laboratory’s MI300A-powered El Capitan supercomputer is already underway. The system, which our sibling site The Next Platform took an early look at in June, is due to come online later next year and promises 2 exaFLOPS of peak performance.

Given the extreme demand for AI accelerators and the advanced packaging tech involved in these chips, for which capacity is already constrained, we expect it may be difficult to get your hands on either the MI300A or MI300X unless you’re a government agency or hyperscale cloud provider.

Still, a number of major OEMs have announced support for the chips, including Hewlett Packard Enterprise, Lenovo, Supermicro, Eviden, Gigabyte, and others. ®