
Summary: Researchers developed a robotic sensor that uses artificial intelligence to read braille at a remarkable 315 words per minute with 87% accuracy, surpassing the average human reading speed. The sensor employs machine learning algorithms to interpret braille with high sensitivity, mirroring human-like reading behavior.

Although not designed as assistive technology, this breakthrough has implications for developing sensitive robotic hands and prosthetics. The sensor’s success in reading braille demonstrates the potential of robotics in replicating complex human tactile skills.

Key Facts:

- The robotic braille reader uses AI and a camera-equipped ‘fingertip’ sensor to read at double the speed of most human readers.

- The robot’s high sensitivity makes it an ideal model for developing advanced robotic hands or prosthetics.

- This development tackles the engineering challenge of replicating human fingertip sensitivity in robotics, offering broader applications beyond reading braille.

Source: University of Cambridge

Researchers have developed a robotic sensor that incorporates artificial intelligence techniques to read braille at speeds roughly double that of most human readers.

The research team, from the University of Cambridge, used machine learning algorithms to teach a robotic sensor to quickly slide over lines of braille text. The robot was able to read the braille at 315 words per minute at close to 90% accuracy.

Although the robot braille reader was not developed as an assistive technology, the researchers say the high sensitivity required to read braille makes it an ideal test in the development of robot hands or prosthetics with comparable sensitivity to human fingertips.

The results are reported in the journal IEEE Robotics and Automation Letters.

Human fingertips are remarkably sensitive and help us gather information about the world around us. Our fingertips can detect tiny changes in the texture of a material or help us know how much force to use when grasping an object: for example, picking up an egg without breaking it or a bowling ball without dropping it.

Reproducing that level of sensitivity in a robotic hand, in an energy-efficient way, is a big engineering challenge. In Professor Fumiya Iida’s lab in Cambridge’s Department of Engineering, researchers are developing solutions to this and other skills that humans find easy, but robots find difficult.

“The softness of human fingertips is one of the reasons we’re able to grip things with the right amount of pressure,” said Parth Potdar from Cambridge’s Department of Engineering and an undergraduate at Pembroke College, the paper’s first author. “For robotics, softness is a useful characteristic, but you also need lots of sensor information, and it’s tricky to have both at once, especially when dealing with flexible or deformable surfaces.”

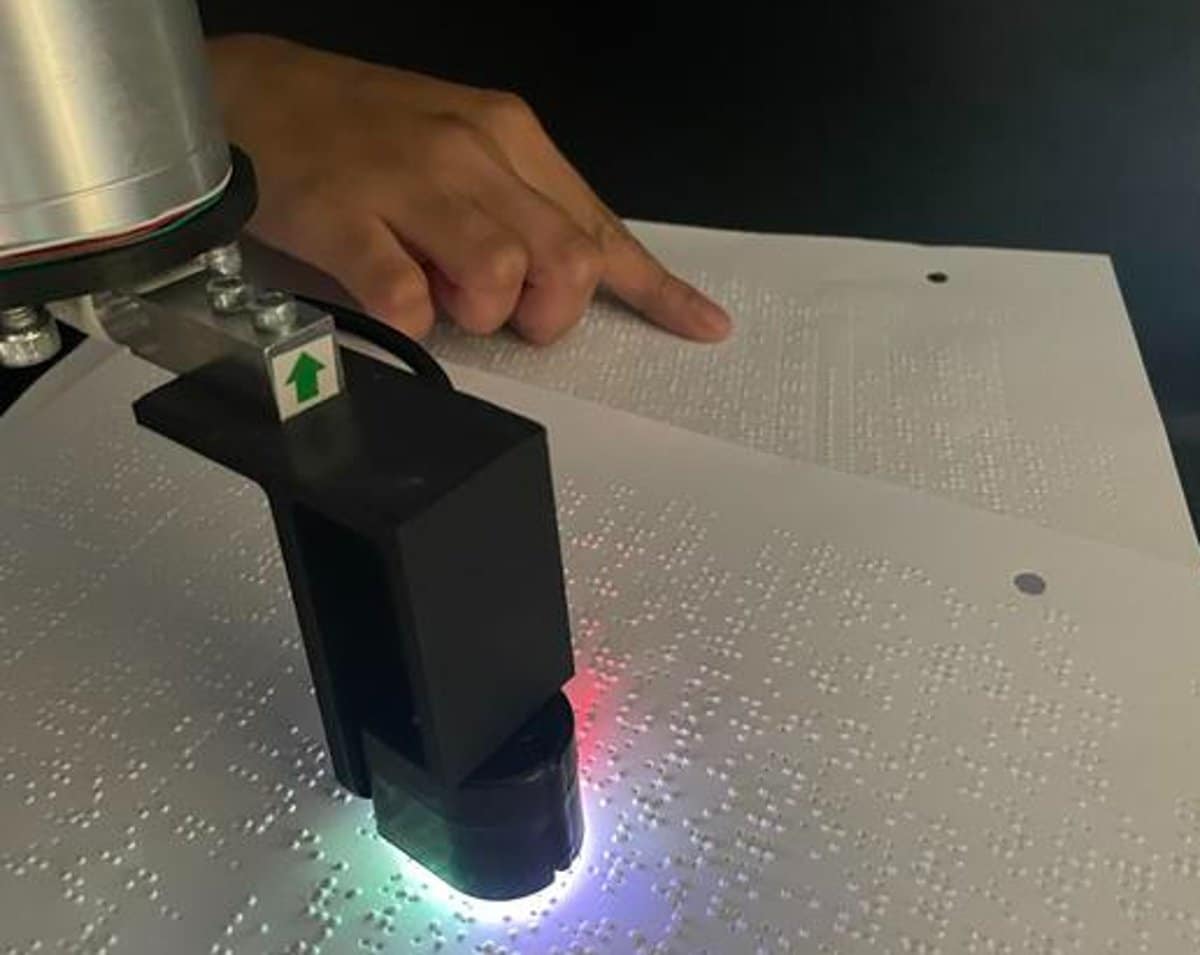

Braille is an ideal test for a robot ‘fingertip’ as reading it requires high sensitivity, since the dots in each representative letter pattern are so close together. The researchers used an off-the-shelf sensor to develop a robotic braille reader that more accurately replicates human reading behaviour.

“There are existing robotic braille readers, but they only read one letter at a time, which is not how humans read,” said co-author David Hardman, also from the Department of Engineering.

“Existing robotic braille readers work in a static way: they touch one letter pattern, read it, pull up from the surface, move over, lower onto the next letter pattern, and so on. We want something that’s more realistic and far more efficient.”

The robotic sensor the researchers used has a camera in its ‘fingertip’, and reads by using a combination of the information from the camera and the sensors. “This is a hard problem for roboticists as there’s a lot of image processing that needs to be done to remove motion blur, which is time and energy-consuming,” said Potdar.

The team developed machine learning algorithms so the robotic reader would be able to ‘deblur’ the images before the sensor attempted to recognise the letters. They trained the algorithm on a set of sharp images of braille with fake blur applied. After the algorithm had learned to deblur the letters, they used a computer vision model to detect and classify each character.
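As a rough illustration of what training on "fake blur" can look like, the sketch below builds one (blurred, sharp) training pair from a sharp image. It is a minimal example under stated assumptions, not the researchers' method: the article does not say how the blur was generated, so the uniform horizontal kernel, the function names `apply_horizontal_motion_blur` and `make_training_pair`, and the `kernel_length` parameter are all hypothetical.

```python
import numpy as np


def apply_horizontal_motion_blur(image: np.ndarray, kernel_length: int) -> np.ndarray:
    """Smear each image row with a uniform 1-D kernel.

    Assumption: sliding motion is modelled as a simple horizontal box blur.
    The blur model actually used by the researchers is not given in the article.
    """
    if kernel_length < 1:
        raise ValueError("kernel_length must be at least 1")
    kernel = np.full(kernel_length, 1.0 / kernel_length)
    rows = image.astype(float)
    # mode="same" keeps every row at its original width.
    return np.stack([np.convolve(row, kernel, mode="same") for row in rows])


def make_training_pair(sharp: np.ndarray, kernel_length: int):
    """Return (blurred_input, sharp_target) for a deblurring model."""
    return apply_horizontal_motion_blur(sharp, kernel_length), sharp.astype(float)


# A toy "braille dot": a single bright pixel in an otherwise dark image.
sharp = np.zeros((5, 9))
sharp[2, 4] = 1.0
blurred, target = make_training_pair(sharp, kernel_length=3)
```

A deblurring model would then be trained to map `blurred` back to `target`. Because the sharp image is known in advance, pairs like this can be generated in bulk without capturing matched sharp and blurred frames from a moving sensor.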

Once the algorithms were incorporated, the researchers tested their reader by sliding it quickly along rows of braille characters. The robotic braille reader could read at 315 words per minute at 87% accuracy, which is twice as fast and about as accurate as a human braille reader.

“Considering that we used fake blur to train the algorithm, it was surprising how accurate it was at reading braille,” said Hardman. “We found a nice trade-off between speed and accuracy, which is also the case with human readers.”

“Braille reading speed is a great way to measure the dynamic performance of tactile sensing systems, so our findings could be applicable beyond braille, for applications like detecting surface textures or slippage in robotic manipulation,” said Potdar.

In future, the researchers are hoping to scale the technology to the size of a humanoid hand or skin.

Funding: The research was supported in part by the Samsung Global Research Outreach Program.

About this AI and robotics research news

Author: Sarah Collins

Source: University of Cambridge

Contact: Sarah Collins – University of Cambridge

Image: The image is credited to Neuroscience News

Original Research: Closed access.

“High-Speed Tactile Braille Reading via Biomimetic Sliding Interactions” by Parth Potdar et al. IEEE Robotics and Automation Letters

Abstract

High-Speed Tactile Braille Reading via Biomimetic Sliding Interactions

Most braille-reading robotic sensors employ a discrete letter-by-letter reading strategy, despite the higher potential speeds of a biomimetic sliding approach.

We propose a complete pipeline for continuous braille reading: frames are dynamically collected with a vision-based tactile sensor; an autoencoder removes motion-blurring artefacts; a lightweight YOLO v8 model classifies the braille characters; and a data-driven consolidation stage minimizes errors in the predicted string.
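To make the idea of a consolidation stage concrete, here is a minimal sketch of one way overlapping per-frame detections could be merged into a single string. It is not the paper's data-driven method, which the abstract does not describe. It swaps in a plain majority vote per braille cell, and it assumes each detection arrives with an estimated position along the line. The function name `consolidate_detections`, the `(x_mm, char)` input format, and the 6.0 mm cell pitch are illustrative assumptions.

```python
from collections import Counter, defaultdict


def consolidate_detections(detections, cell_pitch_mm=6.0):
    """Merge noisy (x_mm, char) detections into one string by majority vote.

    Assumptions (hypothetical, not from the paper):
    - each detection carries an estimated position x_mm along the line;
    - braille cells are evenly spaced at cell_pitch_mm.
    Cells with no detections are skipped rather than filled in.
    """
    votes = defaultdict(Counter)
    for x_mm, char in detections:
        # Snap the estimated position to the nearest cell index.
        cell_index = round(x_mm / cell_pitch_mm)
        votes[cell_index][char] += 1
    # Read the cells left to right, keeping the most frequent label in each.
    return "".join(votes[i].most_common(1)[0][0] for i in sorted(votes))


# The same cell is seen in several frames, with occasional misclassifications.
detections = [
    (0.1, "h"), (0.3, "h"), (-0.2, "b"),
    (6.2, "e"), (5.9, "e"),
    (12.1, "l"), (11.8, "l"),
    (18.0, "l"), (18.3, "l"), (17.7, "i"),
    (24.1, "o"),
]
text = consolidate_detections(detections)
```

Voting by position rather than collapsing repeated labels keeps doubled letters intact, since two adjacent cells with the same character still occupy distinct positions.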

We demonstrate a state-of-the-art speed of 315 words per minute at 87.5% accuracy, more than twice the speed of human braille reading.

Whilst demonstrated on braille, this biomimetic sliding approach can be further employed for richer dynamic spatial and temporal detection of surface textures, and we consider the challenges which should be addressed in its development.
